I've been playing around with Web API a lot recently, and I've found that it's a really powerful and elegant way to create internet-based applications. After writing several server-side Web API applications, I thought that it would be fun to write a Windows Phone 8 application that used Web API to communicate with one of my server-side applications.

I was using the Visual Studio 2013 Preview to write my Web API application, and by default Visual Studio 2012 and later use IIS Express as the development web server, hosted on http://localhost with a random port. With this in mind, I thought that it would be trivial to create a Windows Phone 8 application that sent HTTP GET requests to http://localhost to download data. It turned out to be considerably more difficult than I had assumed, so I thought that I would dedicate a blog post to getting this scenario to work.

I should point out that I stumbled across the following article while I was getting my environment up and working, but that article had a few steps that didn't apply to my environment, and there were a few things that were required for my environment that were missing from the article:

http://msdn.microsoft.com/en-us/library/windowsphone/develop/jj684580.aspx

In addition, there were a few things that I needed that might not apply to everyone, and I'll try to point those out as I go along.

System Requirements for Windows Phone SDK 8.0

First things first, you need to make sure that you have a physical computer that meets the system requirements for the Windows Phone 8 SDK that are posted at the following URL:

http://www.microsoft.com/en-us/download/details.aspx?id=35471

Here is an annotated copy of the system requirements list at the time that I wrote this blog:

- Supported Operating Systems:
  - Operating System Type:
    - Windows 8 64-bit (x64) client versions
    - Note: You cannot use Windows 8 32-bit (x86) client versions
- Hardware:
  - 6.5 GB of free hard disk space
  - 4 GB RAM
  - 64-bit (x64) CPU
- Windows Phone 8 Emulator:
  - Windows 8 Pro edition or greater
  - Requires a processor that supports Second Level Address Translation (SLAT)
  - Note: The Windows Phone 8 Emulator runs in Hyper-V, so you cannot use a Hyper-V virtual machine for the host computer where you install the Windows Phone 8 SDK
- Additional Notes:
  - If your computer meets the hardware and operating system requirements, but does not meet the requirements for the Windows Phone 8 Emulator, the Windows Phone SDK 8.0 will install and run. However, the Windows Phone 8 Emulator will not function and you will not be able to deploy or test apps on the Windows Phone 8 Emulator.

You Cannot use "Localhost" for Testing

The first time that I launched my Web API application and tried to connect to it from the Windows Phone 8 Emulator (WP8E), I used "http://localhost" as the domain where my Web API application was hosted. This was a silly mistake on my part; the WP8E runs in Hyper-V, and the WP8E believes that it is a separate computing device from your host computer, so using "http://localhost" in the WP8E meant that it was trying to browse to itself, not the host machine. (Duh.)

Your Host Computer Must Not Use IPSEC

My computer was joined to a domain, and our domain uses IP Security (IPSEC) for obvious reasons. That being said, the Windows Phone 8 Emulator is not going to use IPSEC to connect to the host machine, so I needed to find a way around IPSEC.

Our IT department allows us to designate a domain-joined machine as a boundary machine to get past this problem, but I decided to set up a new, physical machine from scratch for my development testing.

Verify that the Windows Phone Emulator Internal Switch Exists

After you install the Windows Phone 8 SDK with the Windows Phone 8 Emulator (WP8E), you will have a new virtual machine in Hyper-V with a name like "Emulator WVGA 512MB"; this is the actual WP8E instance.

You should also have a new virtual switch named "Windows Phone Emulator Internal Switch" in the Hyper-V Virtual Switch Manager; WP8E will use this virtual switch to communicate with your host machine. (This virtual switch was missing on one of the systems where I was doing some testing, so I had to add it manually.)

Disable Windows Firewall on your Host Computer

Since the Hyper-V machine for the Windows Phone 8 Emulator (WP8E) and IIS Express will be communicating over your network, you will need to make sure that the Windows Firewall is not blocking the communication. I attempted to add an exception for IIS Express to the Windows Firewall, but that did not seem to have any effect - I had to actually disable the Windows Firewall on my development machine to get my environment working. (Note that it is entirely possible that I needed to configure something else in my Windows Firewall settings in order to allow my environment to work without disabling Windows Firewall, but I couldn't find it, and it was far easier in the short term to just disable Windows Firewall for the time being.)

Verify the Internal IP Address of your Windows Phone 8 Emulator

It's entirely possible that the Windows Phone 8 Emulator (WP8E) always uses a 169.254.nnn.nnn IP address on the "Windows Phone Emulator Internal Switch", but I needed to verify which IP address range to use when configuring IIS Express.

To do this, I dropped the following code into my Windows Phone 8 application and stepped through it in a debugger to see the IP addresses that the WP8E had assigned to each network interface:

    var hostnames = Windows.Networking.Connectivity.NetworkInformation.GetHostNames();
    foreach (var hn in hostnames)
    {
        if (hn.IPInformation != null)
        {
            string ipAddress = hn.DisplayName;
        }
    }

I had two IP addresses for the WP8E: one in the 192.168.nnn.nnn IP address range, and another in the 169.254.nnn.nnn IP address range. Once I verified that one of my IP addresses was in the 169.254.nnn.nnn range, I was able to pick an IP address in that range for IIS Express.
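If you would rather not inspect each address by eye in the debugger, a small helper can flag the addresses that fall in the 169.254.nnn.nnn range. This is only a sketch: the class name LinkLocalCheck and the method name IsLinkLocalIPv4 are made up for illustration, and it uses plain string parsing so that it has no dependency on the phone APIs.

```csharp
using System;

public static class LinkLocalCheck
{
    // Hypothetical helper: returns true when the text is an IPv4 address
    // whose first two octets are 169.254 (the range discussed above).
    public static bool IsLinkLocalIPv4(string address)
    {
        if (string.IsNullOrEmpty(address)) return false;

        string[] parts = address.Split('.');
        if (parts.Length != 4) return false;

        int[] octets = new int[4];
        for (int i = 0; i < 4; i++)
        {
            // Every octet must be a number between 0 and 255.
            if (!int.TryParse(parts[i], out octets[i])) return false;
            if (octets[i] < 0 || octets[i] > 255) return false;
        }

        return octets[0] == 169 && octets[1] == 254;
    }
}
```

Inside the loop shown earlier, the check would read something like LinkLocalCheck.IsLinkLocalIPv4(hn.DisplayName), so only the address on the internal switch is reported.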

Add an Internal IP Address to IIS Express for your Web API Application

Once you have verified the IP address range that the Windows Phone 8 Emulator (WP8E) is using, you can either pick a random IP address within that range to use with IIS Express, or you can use the default IP address for the Windows Phone Emulator Internal Switch. To verify the default IP address, open a command prompt, run ipconfig, and look for the adapter for the Internal Ethernet Port Windows Phone Emulator Internal Switch:

    C:\>ipconfig

    Windows IP Configuration

    Ethernet adapter vEthernet (Internal Ethernet Port Windows Phone Emulator Internal Switch):

       Connection-specific DNS Suffix  . :
       Link-local IPv6 Address . . . . . : fe80::81d3:c1c7:307b:d732%11
       IPv4 Address. . . . . . . . . . . : 169.254.80.80
       Subnet Mask . . . . . . . . . . . : 255.255.0.0
       Default Gateway . . . . . . . . . :

    Ethernet adapter vEthernet (Intel(R) 82579LM Gigabit Network Connection Virtual Switch):

       Connection-specific DNS Suffix  . :
       Link-local IPv6 Address . . . . . : fe80::3569:3387:bb3f:583b%8
       IPv4 Address. . . . . . . . . . . : 192.168.1.72
       Subnet Mask . . . . . . . . . . . : 255.255.255.0
       Default Gateway . . . . . . . . . : 192.168.1.1

    C:\>
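The same lookup can be done in code on the host computer if you prefer that to reading ipconfig output. The following is a rough sketch for a desktop .NET console project (not the phone project); the class and method names are invented for illustration, and it assumes that the adapter name contains the text "Windows Phone Emulator Internal Switch", as it does in the output above.

```csharp
using System;
using System.Collections.Generic;
using System.Net.NetworkInformation;
using System.Net.Sockets;

public static class EmulatorSwitchFinder
{
    // Assumption: the adapter name contains this text, as in the ipconfig output.
    private const string SwitchName = "Windows Phone Emulator Internal Switch";

    // Returns true when an adapter name looks like the emulator's internal switch.
    public static bool MatchesSwitch(string adapterName)
    {
        return adapterName != null &&
               adapterName.IndexOf(SwitchName, StringComparison.OrdinalIgnoreCase) >= 0;
    }

    // Collects the IPv4 addresses assigned to the matching adapter(s) on this host.
    public static List<string> GetSwitchIPv4Addresses()
    {
        var addresses = new List<string>();
        foreach (NetworkInterface nic in NetworkInterface.GetAllNetworkInterfaces())
        {
            if (!MatchesSwitch(nic.Name)) continue;

            foreach (UnicastIPAddressInformation info in nic.GetIPProperties().UnicastAddresses)
            {
                // Skip IPv6 entries such as the fe80:: link-local address.
                if (info.Address.AddressFamily == AddressFamily.InterNetwork)
                {
                    addresses.Add(info.Address.ToString());
                }
            }
        }
        return addresses;
    }
}
```

Calling EmulatorSwitchFinder.GetSwitchIPv4Addresses() from a console application's Main method and writing each entry to the console should list the address that ipconfig reports for the internal switch.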

If you decide to use a custom IP address in the range that your Windows Phone 8 Emulator is using, you would need to enter that IP address in the IPv4 TCP/IP settings for the Internal Ethernet Port Windows Phone Emulator Internal Switch.

Once you have the IP address that you intend to use, you will need to add that to your IIS Express settings. There are two easy ways to do this, both of which require administrative privileges on your system:

Method #1: Use AppCmd from an elevated command prompt

Open an elevated command prompt session by right-clicking the Command Prompt icon and choosing Run as administrator, then enter the following commands:

    cd "%ProgramFiles%\IIS Express"
    appcmd.exe set config -section:system.applicationHost/sites /+"[name='WebApplication1'].bindings.[protocol='http',bindingInformation='169.254.21.12:80:']" /commit:apphost

Where WebApplication1 is the name of your Web API application and 169.254.21.12 is the IP address that you chose for your testing.

Method #2: Manually edit ApplicationHost.config

Open Windows Notepad as an administrator, and open the "ApplicationHost.config" file for IIS Express for editing. By default this file should be located at "%UserProfile%\Documents\IISExpress\config\ApplicationHost.config".

Locate the code for the website of your Web API application; this should resemble something like the following, where WebApplication1 is the name of your Web API application:

    <site name="WebApplication1" id="1" serverAutoStart="true">
        <application path="/">
            <virtualDirectory path="/" physicalPath="%IIS_SITES_HOME%\WebApplication1" />
        </application>
        <bindings>
            <binding protocol="http" bindingInformation=":54321:localhost" />
        </bindings>
    </site>

Copy the existing binding and paste it below the original entry, then change the binding to resemble the following example, where 169.254.21.12 is the IP address that you chose for your testing:

    <site name="WebApplication1" id="1" serverAutoStart="true">
        <application path="/">
            <virtualDirectory path="/" physicalPath="%IIS_SITES_HOME%\WebApplication1" />
        </application>
        <bindings>
            <binding protocol="http" bindingInformation=":54321:localhost" />
            <binding protocol="http" bindingInformation="169.254.21.12:80:" />
        </bindings>
    </site>

Save the file and close Windows Notepad.
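If you find yourself repeating this edit on several machines, the same change can be scripted. The sketch below uses LINQ to XML from a desktop .NET console project; the class and method names are hypothetical, and it only assumes the site and bindings layout shown in the example above. Back up ApplicationHost.config before trying anything like this, because saving through XDocument may change the file's whitespace.

```csharp
using System;
using System.Linq;
using System.Xml.Linq;

public static class BindingEditor
{
    // Adds an http binding to the named site. Returns false when the site
    // is not found or when the binding is already present.
    public static bool AddHttpBinding(XDocument config, string siteName, string bindingInformation)
    {
        XElement site = config.Descendants("site")
            .FirstOrDefault(s => (string)s.Attribute("name") == siteName);
        if (site == null) return false;

        XElement bindings = site.Element("bindings");
        if (bindings == null)
        {
            bindings = new XElement("bindings");
            site.Add(bindings);
        }

        bool exists = bindings.Elements("binding")
            .Any(b => (string)b.Attribute("bindingInformation") == bindingInformation);
        if (exists) return false;

        bindings.Add(new XElement("binding",
            new XAttribute("protocol", "http"),
            new XAttribute("bindingInformation", bindingInformation)));
        return true;
    }

    // Loads the file, applies the change, and saves only if something changed.
    public static bool ApplyToFile(string path, string siteName, string bindingInformation)
    {
        XDocument config = XDocument.Load(path);
        bool changed = AddHttpBinding(config, siteName, bindingInformation);
        if (changed) config.Save(path);
        return changed;
    }
}
```

For the example in this post, the call would be BindingEditor.ApplyToFile(path, "WebApplication1", "169.254.21.12:80:"), where path is the expanded location of your ApplicationHost.config file.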

Specify the Internal IP Address of your Web API Application in your Windows Phone 8 Application

Once you have configured IIS Express to use an internal IP address, you need to specify that IP address for your Windows Phone 8 application. The following example shows what this might look like:

    public void LoadData()
    {
        if (this.IsDataLoaded == false)
        {
            this.Items.Clear();
            WebClient webClient = new WebClient();
            webClient.Headers["Accept"] = "application/json";
            webClient.DownloadStringCompleted += new DownloadStringCompletedEventHandler(webClient_DownloadStringCompleted);
            webClient.DownloadStringAsync(new Uri(@"http://169.254.21.12/api/TodoList"));
        }
    }

Where 169.254.21.12 is the IP address that you chose for your testing.
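The example above refers to a webClient_DownloadStringCompleted handler without showing it. The following is one possible shape for that handler, offered as a sketch rather than the code from my application: the TodoListDownload class and its Json and ErrorMessage properties are invented for illustration, and the JSON parsing is left out. The main point is to check for cancellation and errors before reading the result, because reading the result of a failed request throws an exception.

```csharp
using System;
using System.Net;

public class TodoListDownload
{
    public bool IsDataLoaded { get; private set; }
    public string Json { get; private set; }          // hypothetical: raw response text
    public string ErrorMessage { get; private set; }  // hypothetical: last failure, if any

    // Same handler name as in LoadData() above.
    public void webClient_DownloadStringCompleted(object sender, DownloadStringCompletedEventArgs e)
    {
        // e.Result is wrapped in a delegate so it is only read on success.
        Complete(e.Cancelled, e.Error, () => e.Result);
    }

    // Separated from the event handler so the logic can be exercised directly.
    public void Complete(bool cancelled, Exception error, Func<string> readResult)
    {
        if (cancelled)
        {
            ErrorMessage = "The request was cancelled.";
            return;
        }

        if (error != null)
        {
            // A connection failure here usually means that one of the
            // binding, firewall, or proxy steps still needs attention.
            ErrorMessage = error.Message;
            return;
        }

        Json = readResult();
        IsDataLoaded = true;
    }
}
```

When a request from the emulator fails, the message stored in ErrorMessage is usually the quickest hint as to which step of this walkthrough to revisit.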

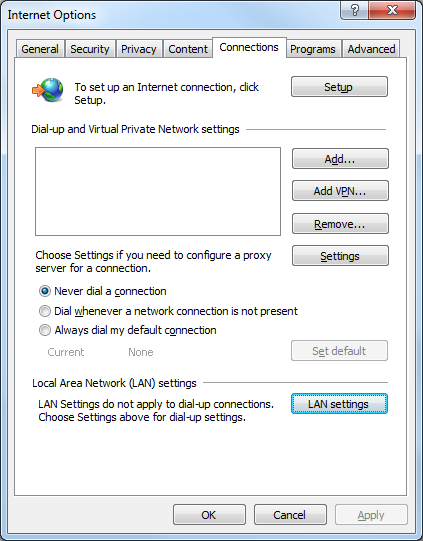

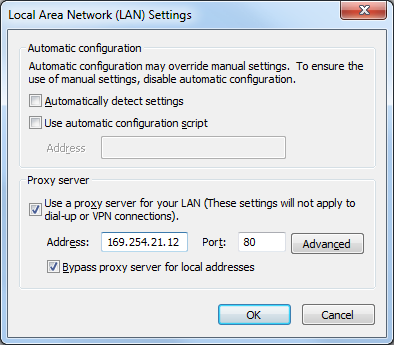

Configure Proxy Settings in your Internet Options to Bypass the Proxy for your Internal IP Address

It is essential that you configure your proxy settings so that the IP address of your Web API application will be considered an internal network address; otherwise all of the requests from the Windows Phone 8 Emulator (WP8E) will attempt to locate your Web API application on the Internet, which will fail.

The WP8E will use the proxy settings from your Windows Internet options, which are the same settings that are used by Internet Explorer. This means that you can either set your proxy settings through the Windows Control Panel by using the Internet Options feature, or you can use Internet Explorer's Tools -> Internet Options menus.

Once you have the Internet Options dialog open, click the Connections tab, then click the LAN settings button.

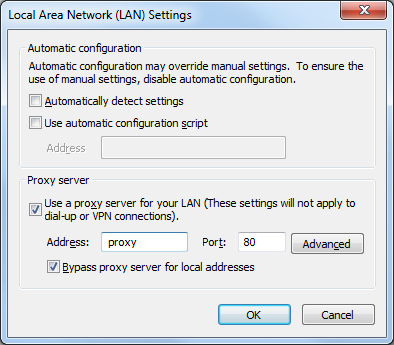

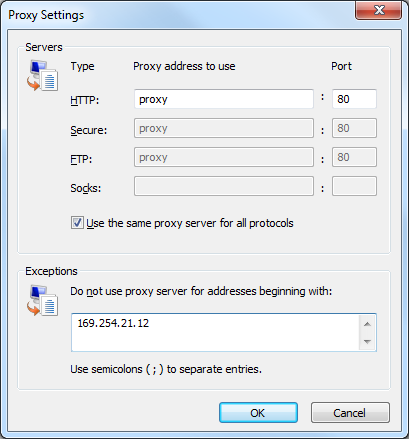

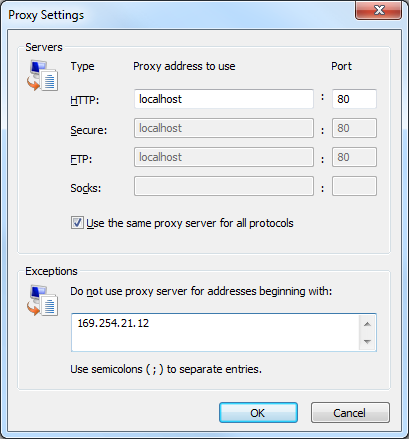

There are a few ways that you can specify your IP address as internal:

Method #1: Specify your proxy server, then click the Advanced button and add your internal IP address to the list of exceptions.

Method #2: If you do not need actual Internet access during your testing, you can specify "localhost" as the proxy server, then click the Advanced button and add your internal IP address to the list of exceptions.

Method #3: Specify your internal IP address as the proxy server; it isn't really a proxy server, of course, but this will keep the requests internal.

Launch IIS Express as an Administrator

In order for IIS Express to register a binding with HTTP.SYS on an IP address other than 127.0.0.1, you need to run IIS Express using an elevated session. There are two easy ways to do this:

Method #1: Launch IIS Express from Visual Studio as an administrator

Start Visual Studio as an administrator by right-clicking the Visual Studio icon and choosing Run as administrator. Once Visual Studio has opened, you can open your Web API project and hit F5 to launch IIS Express.

Method #2: Launch IIS Express from an elevated command prompt

Open an elevated command prompt session by right-clicking the Command Prompt icon and choosing Run as administrator, then enter the following commands:

    cd "%ProgramFiles%\IIS Express"
    iisexpress.exe /site:WebApplication1

Where WebApplication1 is the name of your Web API application.
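Before involving the emulator, it can save time to confirm from the host computer that IIS Express is answering on the new binding. The sketch below is meant for a desktop .NET console project; the class and method names are made up for illustration, and the address and path are the example values used elsewhere in this post.

```csharp
using System;
using System.Net;

public static class BindingSmokeTest
{
    // Builds the URL for the Web API endpoint on the internal IP address.
    public static Uri BuildApiUri(string ipAddress, int port, string path)
    {
        return new UriBuilder("http", ipAddress, port, path).Uri;
    }

    // Sends a synchronous GET request and returns the response body.
    // Throws a WebException if the server cannot be reached.
    public static string Fetch(Uri uri)
    {
        using (var client = new WebClient())
        {
            client.Headers[HttpRequestHeader.Accept] = "application/json";
            return client.DownloadString(uri);
        }
    }
}
```

For example, BindingSmokeTest.Fetch(BindingSmokeTest.BuildApiUri("169.254.21.12", 80, "/api/TodoList")) should return the JSON from the Web API application. If that call fails on the host computer, the request from the emulator is unlikely to succeed either.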

In Closing

As I pointed out in my opening statements for this blog, getting the Windows Phone 8 Emulator to communicate with a Web API application on the same computer was not as easy as I would have thought, but all of the steps made sense once I had all of the disparate technologies working together.

Have fun! ;-]

Note: This blog was originally posted at http://blogs.msdn.com/robert_mcmurray/