I had an interesting WebDAV question earlier today that I had not considered before: how can someone create a "Blind Drop Share" using WebDAV? By way of explanation, a Blind Drop Share is a path where users can copy files, but never see the files that are in the directory. You can set up something like this by using NTFS permissions, but that environment can be a little difficult to configure and maintain.

With that in mind, I decided to research a WebDAV-specific solution that didn't require mangling my NTFS permissions. In the end it was pretty easy to achieve, so I thought that it would make a good blog for anyone who wants to do this.

A Little Bit About WebDAV

NTFS permissions contain access controls that configure the directory-listing behavior for files and folders; if you modify those settings, you can control who can see files and folders when they connect to your shared resources. However, there are no built-in features for the WebDAV module which ships with IIS that will approximate the NTFS behavior. But that being said, there is an interesting WebDAV quirk that you can use that will allow you to restrict directory listings, and I will explain how that works.

WebDAV uses the PROPFIND command to retrieve the properties for files and folders, and the WebDAV Redirector will use the response from a PROPFIND command to display a directory listing. (Note: Official WebDAV terminology has no concept of files and folders; those physical objects are respectively called Resources and Collections in WebDAV jargon. But that being said, I will use files and folders throughout this blog post for ease of understanding.)

In any event, one of the HTTP headers that WebDAV uses with the PROPFIND command is the Depth header, which is used to specify how deep the folder/collection traversal should go:

- If you sent a PROPFIND command for the root of your website with a Depth:0 header/value, you would get the properties for just the root directory - with no files listed; a Depth:0 header/value only retrieves properties for the single resource that you requested.

- If you sent a PROPFIND command for the root of your website with a Depth:1 header/value, you would get the properties for every file and folder in the root of your website; a Depth:1 header/value retrieves properties for the resource that you requested and its immediate children.

- If you sent a PROPFIND command for the root of your website with a Depth:infinity header/value, you would get the properties for every file and folder in your entire website; a Depth:infinity header/value retrieves properties for every resource regardless of its depth in the hierarchy. (Note that retrieving directory listings with infinite depth is disabled by default in IIS 7 and IIS 8 because it can be CPU intensive.)
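To make the three Depth values more concrete, here is a minimal Python sketch that builds (but does not send) a PROPFIND request for each one. The helper name `build_propfind` and the `allprop` request body are my own choices for illustration; the URL is the same example host that is used later in this post.

```python
import urllib.request

# Minimal PROPFIND body that asks for all properties of the resource.
PROPFIND_BODY = (
    b'<?xml version="1.0" encoding="utf-8"?>'
    b'<D:propfind xmlns:D="DAV:"><D:allprop/></D:propfind>'
)


def build_propfind(url: str, depth: str) -> urllib.request.Request:
    """Build (but do not send) a PROPFIND request with the given Depth header."""
    if depth not in ("0", "1", "infinity"):
        raise ValueError("Depth must be '0', '1', or 'infinity'")
    return urllib.request.Request(
        url,
        data=PROPFIND_BODY,
        method="PROPFIND",
        headers={"Depth": depth, "Content-Type": "text/xml; charset=utf-8"},
    )


if __name__ == "__main__":
    for depth in ("0", "1", "infinity"):
        req = build_propfind("http://www.contoso.com/", depth)
        print(req.get_method(), req.full_url, "Depth:", req.get_header("Depth"))
```

Actually sending one of these requests would require passing it to `urllib.request.urlopen` along with whatever authentication your server expects, which I have left out of this sketch.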

By analyzing the above information, it should be obvious that what you need to do is to restrict users to using a Depth:0 header/value. But that's where this scenario gets interesting: if your end-users are using the Windows WebDAV Redirector or other similar technology to map a drive to your HTTP website, you have no control over the value of the Depth header. So how can you restrict that?

In the past I would have written a custom native-code HTTP module or ISAPI filter to modify the value of the Depth header; but once you understand how WebDAV works, you can use the URL Rewrite module to modify the headers of incoming HTTP requests to accomplish some pretty cool things - like modifying the values of WebDAV-related HTTP headers.
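As a rough mental model of what that header rewriting does, the following Python sketch shows two things: how an HTTP header name maps to the server-variable name that URL Rewrite works with (the header name is upper-cased, dashes become underscores, and `HTTP_` is prefixed), and what overriding the Depth header amounts to. Both function names are hypothetical, and this is only an illustration of the idea; it is not how IIS implements it.

```python
def header_to_server_variable(header_name: str) -> str:
    """Map an HTTP request header name to its HTTP_* server-variable name."""
    return "HTTP_" + header_name.upper().replace("-", "_")


def force_depth_zero(headers: dict) -> dict:
    """Return a copy of the request headers with Depth overridden to 0."""
    # Drop any client-supplied Depth header, regardless of its casing.
    rewritten = {k: v for k, v in headers.items() if k.lower() != "depth"}
    rewritten["Depth"] = "0"
    return rewritten


if __name__ == "__main__":
    print(header_to_server_variable("Depth"))
    print(force_depth_zero({"Host": "www.contoso.com", "Depth": "1"}))
```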

Adding URL Rewrite Rules to Modify the WebDAV Depth Header

Here's how I configured URL Rewrite to set the value of the Depth header to 0, which allowed me to create a "Blind Drop" WebDAV site:

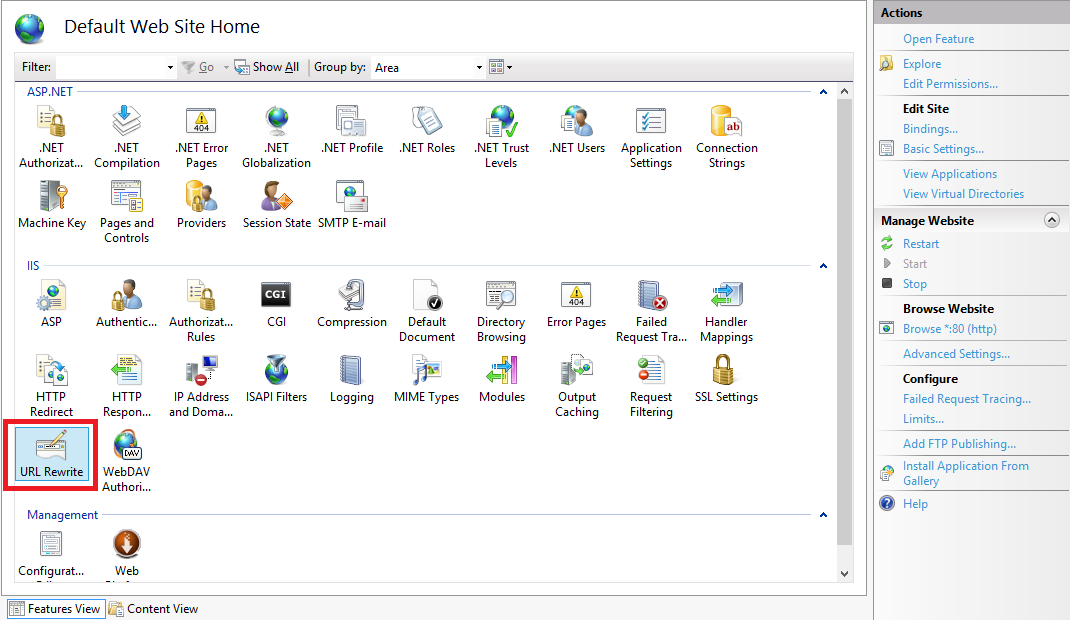

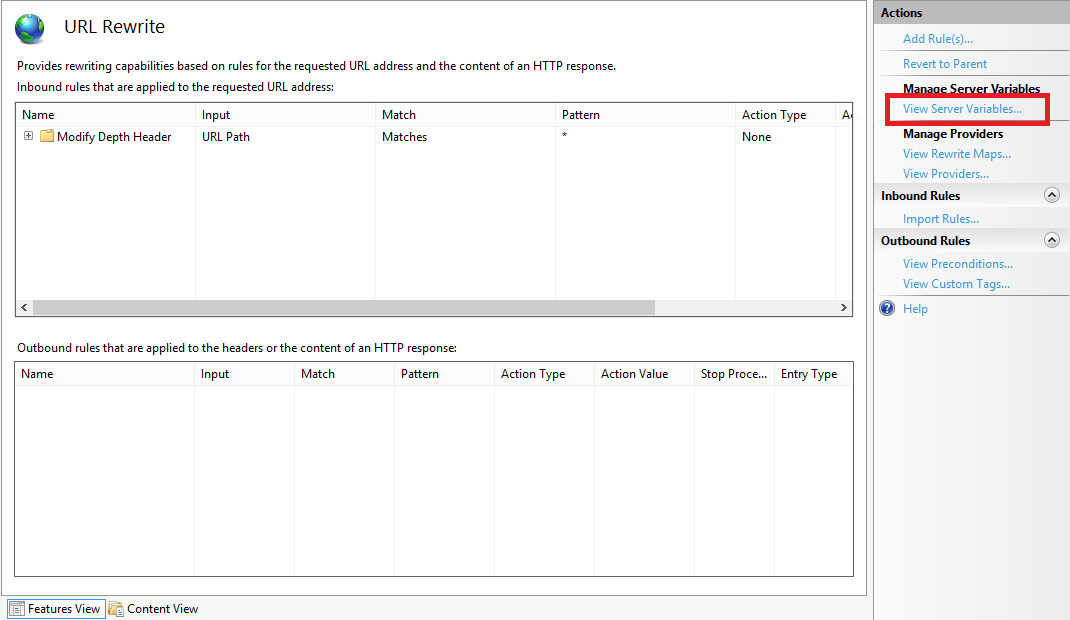

- Open the URL Rewrite feature in IIS Manager for your website.

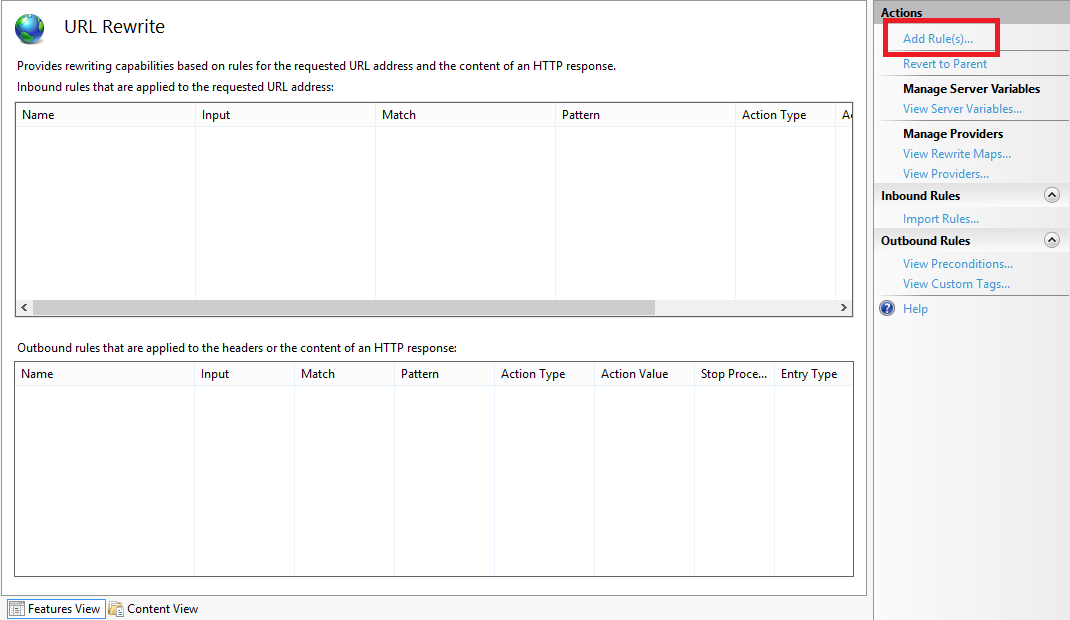

- Click the Add Rules link in the Actions pane.

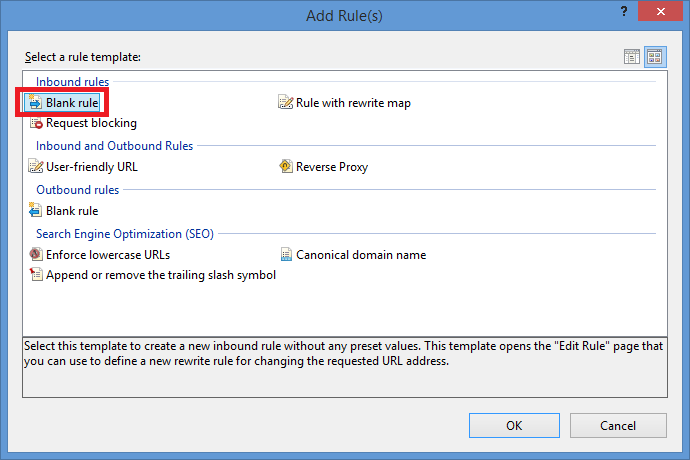

- When the Add Rules dialog box appears, highlight Blank rule and click OK.

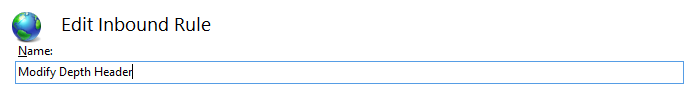

- When the Edit Inbound Rule page appears, configure the following settings:

- Name the rule "Modify Depth Header".

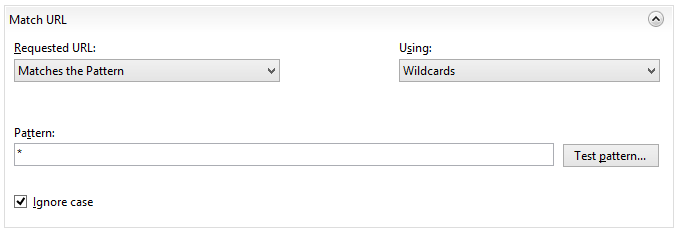

- In the Match URL section:

- Choose Matches the Pattern in the Requested URL drop-down menu.

- Choose Wildcards in the Using drop-down menu.

- Type a single asterisk "*" in the Pattern text box.

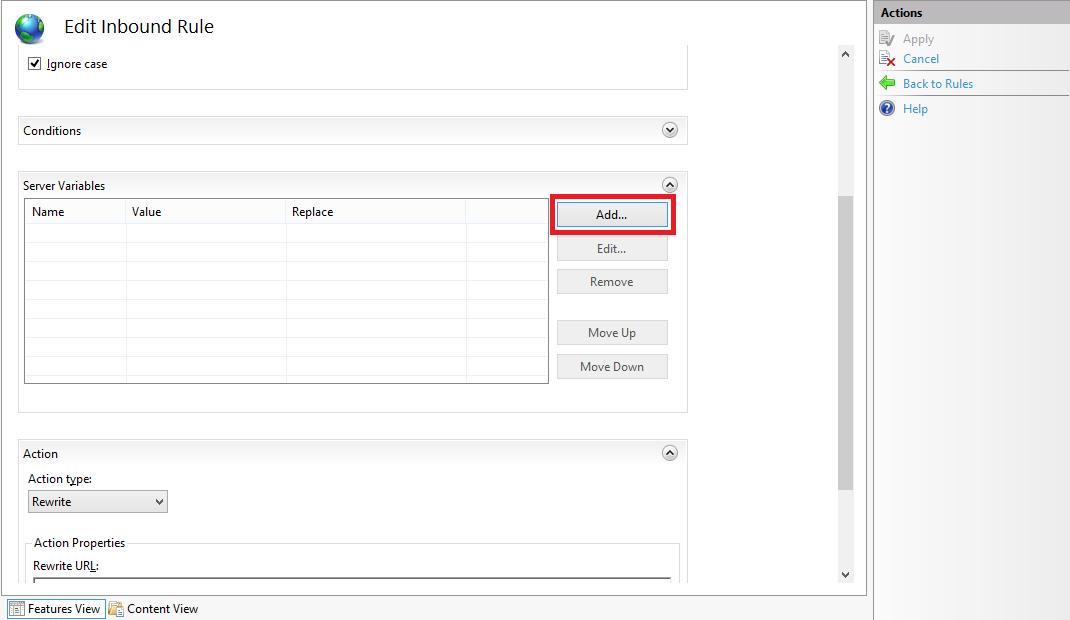

- Expand the Server Variables collection and click Add.

- When the Set Server Variable dialog box appears:

- Type "HTTP_DEPTH" in the Server variable name text box.

- Type "0" in the Value text box.

- Make sure that the Replace the existing value check box is selected.

- Click OK.

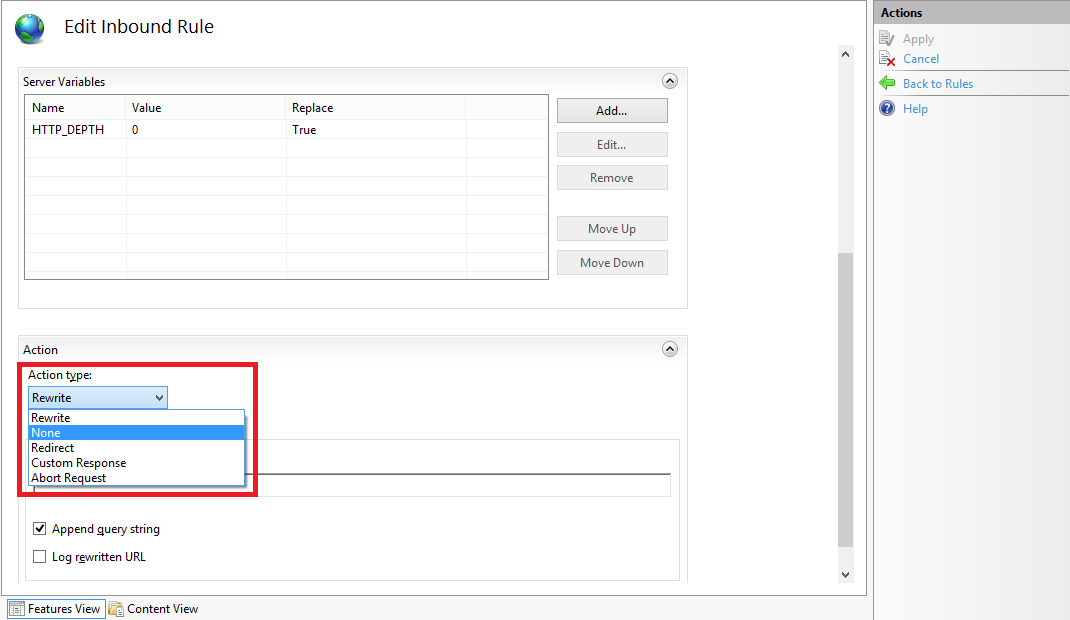

- In the Action group, choose None in the Action type drop-down menu.

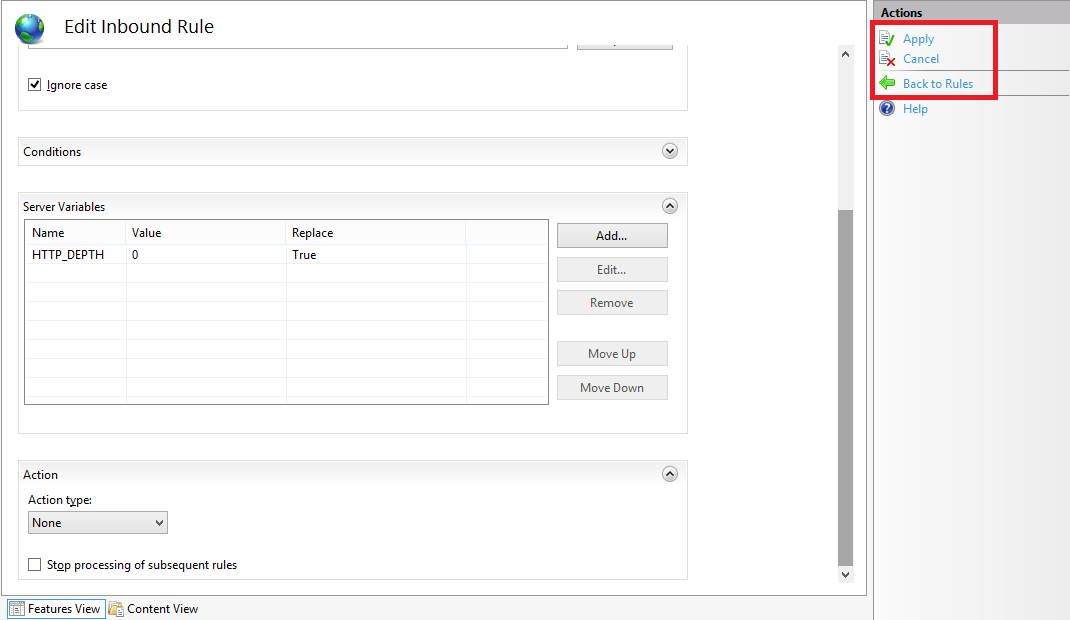

- Click Apply in the Actions pane, and then click Back to Rules.

- Click View Server Variables in the Actions pane.

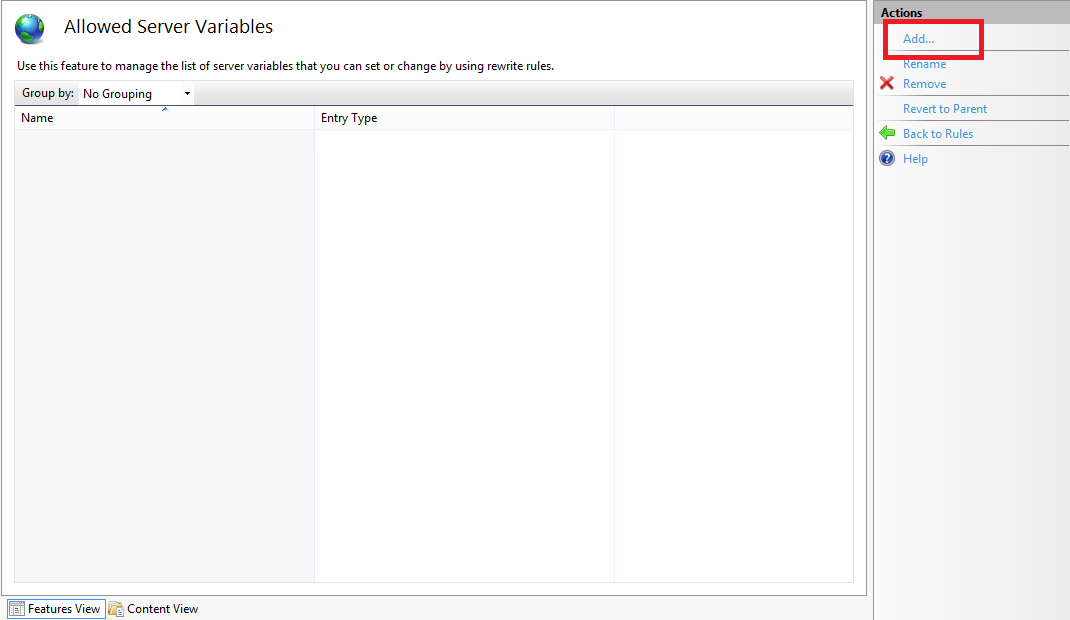

- When the Allowed Server Variables page appears, configure the following settings:

- Click Add in the Actions pane.

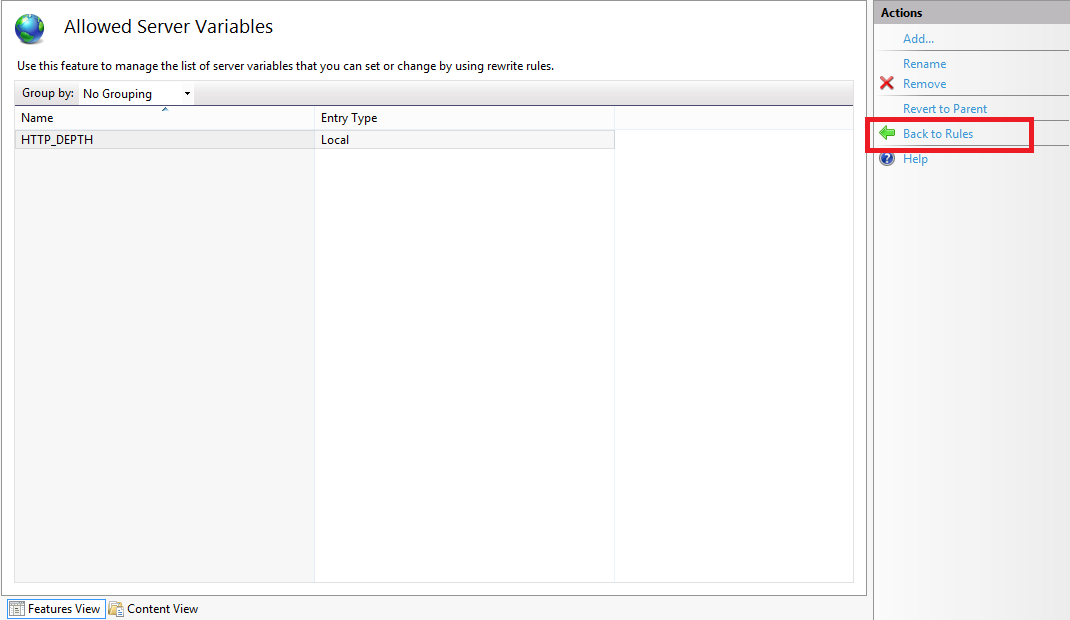

- When the Add Server Variable dialog box appears:

- Type "HTTP_DEPTH" in the Server variable name text box.

- Click OK.

- Click Back to Rules in the Actions pane.

If all of these changes were saved to your applicationHost.config file, the resulting XML might resemble the following example - with XML comments added by me to highlight some of the major sections:

<location path="Default Web Site">

<system.webServer>

<!-- Start of Security Settings -->

<security>

<authentication>

<anonymousAuthentication enabled="false" />

<basicAuthentication enabled="true" />

</authentication>

<requestFiltering>

<fileExtensions applyToWebDAV="false" />

<verbs applyToWebDAV="false" />

<hiddenSegments applyToWebDAV="false" />

</requestFiltering>

</security>

<!-- Start of WebDAV Settings -->

<webdav>

<authoringRules>

<add roles="administrators" path="*" access="Read, Write, Source" />

</authoringRules>

<authoring enabled="true">

<properties allowAnonymousPropfind="false" allowInfinitePropfindDepth="true">

<clear />

<add xmlNamespace="*" propertyStore="webdav_simple_prop" />

</properties>

</authoring>

</webdav>

<!-- Start of URL Rewrite Settings -->

<rewrite>

<rules>

<rule name="Modify Depth Header" enabled="true" patternSyntax="Wildcard">

<match url="*" />

<serverVariables>

<set name="HTTP_DEPTH" value="0" />

</serverVariables>

<action type="None" />

</rule>

</rules>

<allowedServerVariables>

<add name="HTTP_DEPTH" />

</allowedServerVariables>

</rewrite>

</system.webServer>

</location>

In all likelihood, some of these settings will be stored in your applicationHost.config file, and the remaining settings will be stored in the web.config file of your website.
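Because the settings can end up split across two files, it can be handy to sanity-check the rewrite section on its own. The following Python sketch is one way to do that, under the assumption that you have copied the `<rewrite>` element into a string; the `check_depth_rule` helper is hypothetical, and it only checks the two things that this walkthrough depends on: that HTTP_DEPTH is an allowed server variable, and that a rule sets it to 0.

```python
import xml.etree.ElementTree as ET

# The <rewrite> section from the example configuration in this post.
REWRITE_FRAGMENT = """
<rewrite>
  <rules>
    <rule name="Modify Depth Header" enabled="true" patternSyntax="Wildcard">
      <match url="*" />
      <serverVariables>
        <set name="HTTP_DEPTH" value="0" />
      </serverVariables>
      <action type="None" />
    </rule>
  </rules>
  <allowedServerVariables>
    <add name="HTTP_DEPTH" />
  </allowedServerVariables>
</rewrite>
"""


def check_depth_rule(rewrite_xml: str) -> list:
    """Return a list of problems found; an empty list means both checks passed."""
    root = ET.fromstring(rewrite_xml)
    problems = []

    # The variable must be allowed before a rule is permitted to set it.
    allowed = {a.get("name") for a in root.findall("./allowedServerVariables/add")}
    if "HTTP_DEPTH" not in allowed:
        problems.append("HTTP_DEPTH is not listed in allowedServerVariables")

    # At least one rule must set HTTP_DEPTH to 0.
    values = [
        s.get("value")
        for s in root.findall("./rules/rule/serverVariables/set")
        if s.get("name") == "HTTP_DEPTH"
    ]
    if "0" not in values:
        problems.append("no rule sets HTTP_DEPTH to 0")

    return problems


if __name__ == "__main__":
    print(check_depth_rule(REWRITE_FRAGMENT) or "rewrite section looks OK")
```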

Testing the URL Rewrite Settings

If you did not have the URL Rewrite rule in place, or if you disabled the rule, then your web server might respond like the following example if you used the WebDAV Redirector to map a drive to your website from a command prompt:

C:\>net use z: http://www.contoso.com/

Enter the user name for 'www.contoso.com': www.contoso.com\robert

Enter the password for www.contoso.com:

The command completed successfully.

C:\>z:

Z:\>dir

Volume in drive Z has no label.

Volume Serial Number is 0000-0000

Directory of Z:\

09/16/2013 08:55 PM <DIR> .

09/16/2013 08:55 PM <DIR> ..

09/14/2013 12:39 AM <DIR> aspnet_client

09/16/2013 08:06 PM <DIR> scripts

09/16/2013 07:55 PM 66 default.aspx

09/14/2013 12:38 AM 98,757 iis-85.png

09/14/2013 12:38 AM 694 iisstart.htm

09/16/2013 08:55 PM 75 web.config

4 File(s) 99,592 bytes

8 Dir(s) 956,202,631,168 bytes free

Z:\>

However, when you have the URL Rewrite rule correctly configured and enabled, connecting to the same website will resemble the following example - notice how no files or folders are listed:

C:\>net use z: http://www.contoso.com/

Enter the user name for 'www.contoso.com': www.contoso.com\robert

Enter the password for www.contoso.com:

The command completed successfully.

C:\>z:

Z:\>dir

Volume in drive Z has no label.

Volume Serial Number is 0000-0000

Directory of Z:\

09/16/2013 08:55 PM <DIR> .

09/16/2013 08:55 PM <DIR> ..

0 File(s) 0 bytes

2 Dir(s) 956,202,803,200 bytes free

Z:\>

Despite the blank directory listing, you can still retrieve the properties for any file or folder if you know that it exists. So if you were to use the mapped drive from the preceding example, you could still use an explicit directory command for any object that you had uploaded or created:

Z:\>dir default.aspx

Volume in drive Z has no label.

Volume Serial Number is 0000-0000

Directory of Z:\

09/16/2013 07:55 PM 66 default.aspx

1 File(s) 66 bytes

0 Dir(s) 956,202,799,104 bytes free

Z:\>dir scripts

Volume in drive Z has no label.

Volume Serial Number is 0000-0000

Directory of Z:\scripts

09/16/2013 07:52 PM <DIR> .

09/16/2013 07:52 PM <DIR> ..

0 File(s) 0 bytes

2 Dir(s) 956,202,799,104 bytes free

Z:\>

The same is true for creating directories and files; you can create them, but they will not show up in the directory listings after you have created them unless you reference them explicitly:

Z:\>md foobar

Z:\>dir

Volume in drive Z has no label.

Volume Serial Number is 0000-0000

Directory of Z:\

09/16/2013 11:52 PM <DIR> .

09/16/2013 11:52 PM <DIR> ..

0 File(s) 0 bytes

2 Dir(s) 956,202,618,880 bytes free

Z:\>cd foobar

Z:\foobar>copy NUL foobar.txt

1 file(s) copied.

Z:\foobar>dir

Volume in drive Z has no label.

Volume Serial Number is 0000-0000

Directory of Z:\foobar

09/16/2013 11:52 PM <DIR> .

09/16/2013 11:52 PM <DIR> ..

0 File(s) 0 bytes

2 Dir(s) 956,202,303,488 bytes free

Z:\foobar>dir foobar.txt

Volume in drive Z has no label.

Volume Serial Number is 0000-0000

Directory of Z:\foobar

09/16/2013 11:53 PM 0 foobar.txt

1 File(s) 0 bytes

0 Dir(s) 956,202,299,392 bytes free

Z:\foobar>
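The behavior in these listings follows directly from how the Depth header works, and the following toy Python model may help illustrate it. This is not how IIS is implemented; the `RESOURCES` list and the `propfind` function are made up for this sketch, with folder paths ending in a slash.

```python
# A made-up set of resources; folder (collection) paths end with "/".
RESOURCES = ["/", "/default.aspx", "/scripts/", "/foobar/", "/foobar/foobar.txt"]


def propfind(resources: list, path: str, depth: str) -> list:
    """Return the paths that a PROPFIND for `path` would report at `depth`."""
    if path not in resources:
        return []  # a real server would answer 404 Not Found
    if depth == "0" or not path.endswith("/"):
        return [path]  # only the requested resource itself
    results = [path]
    for candidate in resources:
        if candidate == path or not candidate.startswith(path):
            continue
        remainder = candidate[len(path):].rstrip("/")
        # Depth 1 stops at immediate children; infinity includes everything below.
        if depth == "infinity" or "/" not in remainder:
            results.append(candidate)
    return results


if __name__ == "__main__":
    print(propfind(RESOURCES, "/", "1"))                   # normal listing
    print(propfind(RESOURCES, "/", "0"))                   # "blind" listing
    print(propfind(RESOURCES, "/foobar/foobar.txt", "0"))  # explicit lookup
```

With the depth forced to 0, a request for a folder only ever reports the folder itself, but a request for a path that the user already knows still succeeds, which matches the explicit `dir foobar.txt` example above.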

That wraps it up for today's post, although I should point out that if you see any errors when you are using the WebDAV Redirector, you should take a look at the Troubleshooting the WebDAV Redirector section of my Using the WebDAV Redirector article; I have done my best to list every error and resolution that I have discovered over the past several years.

Note: This blog was originally posted at http://blogs.msdn.com/robert_mcmurray/