FTP ETW Tracing and IIS 8 - Part 2

09 April 2014 • by Bob • Batch Files, ETW, IIS 8, Log Files, LogParser, Scripting, Troubleshooting, FTP, IIS

Shortly after I published my FTP ETW Tracing and IIS 8 blog post, I was using the batch file from that blog to troubleshoot an issue that I was having with a custom FTP provider. One of the columns which I display in my results is Clock-Time, which is obviously a sequential timestamp that is used to indicate the time and order in which the events occurred.


At first glance the Clock-Time values might appear to be a range of useless numbers, but I use Clock-Time values quite often when I import the data from my ETW traces into something like Excel and I need to sort the data by the various columns.

That being said, apart from keeping the trace events in order, Clock-Time isn't a very user-friendly value. However, LogParser has some great built-in functions for crunching date/time values, so I decided to update the script to take advantage of some LogParser coolness and reformat the Clock-Time value into a human-readable Date/Time value.

My first order of business was to figure out how to decode the Clock-Time value. Since Clock-Time increases for each event, it is obviously an offset from some constant, and after a bit of searching I found that the Clock-Time value is the offset in 100-nanosecond intervals since midnight on January 1, 1601 (UTC). (This is the same format as the Windows FILETIME structure, which Windows uses in a lot of places, not just ETW.) Once I had that information, it was pretty easy to come up with a LogParser formula to convert the Clock-Time value into the local time for my system, which is much easier to read.
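To make the arithmetic concrete, here is a minimal Python sketch of the same conversion; Python is used only so the math can be checked outside of a trace, and this is not the LogParser formula itself. It assumes Clock-Time is a FILETIME-style count of 100-nanosecond ticks since 1601-01-01 UTC. The function names are hypothetical, and because Python's datetime only resolves microseconds, the last digit of each tick count is dropped.

```python
from datetime import datetime, timedelta, timezone

# Windows FILETIME epoch: midnight, January 1, 1601, in UTC.
FILETIME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)

# One Clock-Time tick is 100 nanoseconds, so 10 ticks make one microsecond.
TICKS_PER_MICROSECOND = 10


def clock_time_to_utc(clock_time: int) -> datetime:
    """Convert a Clock-Time value (100-ns ticks since 1601) to a UTC datetime.

    Python datetimes stop at microseconds, so a remainder of fewer than
    10 ticks is discarded.
    """
    return FILETIME_EPOCH + timedelta(microseconds=clock_time // TICKS_PER_MICROSECOND)


def clock_time_to_local(clock_time: int) -> datetime:
    """Same conversion, expressed in the local time zone of this machine."""
    return clock_time_to_utc(clock_time).astimezone()


if __name__ == "__main__":
    # 116444736000000000 ticks is the FILETIME value for 1970-01-01 00:00 UTC.
    print(clock_time_to_utc(116444736000000000))  # 1970-01-01 00:00:00+00:00
    print(clock_time_to_local(116444736000000000))
```

The local-time variant plays the same role as TO_LOCALTIME() in the query: the instant is unchanged, only the time zone it is displayed in differs.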

With that in mind, here is the modified batch file:

@echo off

rem ======================================================================

rem Clean up old log files

for %%a in (ETL CSV) do if exist "%~n0.%%a" del "%~n0.%%a"

echo Starting the ETW session for full FTP tracing...

LogMan.exe start "%~n0" -p "IIS: Ftp Server" 255 5 -ets

echo.

echo Now reproduce your problem.

echo.

echo After you have reproduced your issue, hit any key to close the FTP

echo tracing session. Your trace events will be displayed automatically.

echo.

pause>nul

rem ======================================================================

echo.

echo Closing the ETW session for full FTP tracing...

LogMan.exe stop "%~n0" -ets

rem ======================================================================

echo.

echo Parsing the results - this may take a long time depending on the size of the trace...

if exist "%~n0.etl" (

TraceRpt.exe "%~n0.etl" -o "%~n0.csv" -of CSV

LogParser.exe "SELECT [Clock-Time], TO_LOCALTIME(ADD(TO_TIMESTAMP('1601-01-01 00:00:00', 'yyyy-MM-dd hh:mm:ss'), TO_TIMESTAMP(DIV([Clock-Time],10000000)))) AS [Date/Time], [Event Name], Type, [User Data] FROM '%~n0.csv'" -i:csv -e 2 -o:DATAGRID -rtp 20

)
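If you want to post-process the CSV that TraceRpt produces somewhere other than LogParser, the following Python sketch reproduces the query above. It keeps [Clock-Time], [Event Name], Type, and [User Data], and it adds a Date/Time value computed the same way as the formula: Clock-Time is integer-divided by 10,000,000 (so fractions of a second are discarded), added to the 1601 epoch, and converted to local time. The column names come from the batch file; the whitespace padding around CSV cells is an assumption, which is why the sketch strips it, and add_date_time is a hypothetical name.

```python
import csv
import io
from datetime import datetime, timedelta, timezone

FILETIME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)
TICKS_PER_SECOND = 10_000_000

# Columns the query selects after [Clock-Time] and the computed [Date/Time].
OTHER_COLUMNS = ["Event Name", "Type", "User Data"]


def add_date_time(csv_text: str) -> list[dict]:
    """Mirror the batch file's SELECT: keep four columns and add Date/Time."""
    reader = csv.reader(io.StringIO(csv_text), skipinitialspace=True)
    # Header cells may carry padding, so strip them before matching names.
    header = [name.strip() for name in next(reader)]
    rows = []
    for values in reader:
        if not values:
            continue  # csv.reader yields [] for blank lines
        record = dict(zip(header, (value.strip() for value in values)))
        # DIV([Clock-Time],10000000): whole seconds, fractional part dropped.
        seconds = int(record["Clock-Time"]) // TICKS_PER_SECOND
        local_time = (FILETIME_EPOCH + timedelta(seconds=seconds)).astimezone()
        row = {"Clock-Time": record["Clock-Time"], "Date/Time": local_time}
        for name in OTHER_COLUMNS:
            row[name] = record.get(name, "")
        rows.append(row)
    return rows
```

Because the division throws away fractional seconds, several events can end up with the same Date/Time; sorting on the raw Clock-Time value is what keeps those events in their original order.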

When you run this new batch file, it will display an additional "Date/Time" column with a more informative value, expressed in local time for the server where you captured the trace.


The new Date/Time column is considerably more practical, so I'll probably keep it in the batch file that I use when I am troubleshooting. You will also notice that I kept the original Clock-Time column; I chose to do so because I will undoubtedly continue to use that column for sorting when I import the data into something else, but you can safely remove that column if you would prefer to use only the new Date/Time value.

That wraps it up for today's post. :-)

Note: This blog was originally posted at http://blogs.msdn.com/robert_mcmurray/

FTP ETW Tracing and IIS 8

08 April 2014 • by Bob • FTP, IIS, IIS 8, LogParser, ETW, Troubleshooting, Scripting, Batch Files, Log Files

In the past I have written a couple of blogs about using the FTP service's Event Tracing for Windows (ETW) features to troubleshoot issues; see "FTP and ETW Tracing" and "Troubleshooting Custom FTP Providers with ETW" for details. Those blog posts contain batch files that use the built-in Windows LogMan utility to capture an ETW trace and the downloadable LogParser utility to parse the results into human-readable form. I use the batch files from those blogs quite often, especially when I am developing custom FTP providers that add new functionality to my FTP servers.

Unfortunately, sometime around the release of Windows 8 and Windows Server 2012 I discovered that the ETW format had changed, and the current version of LogParser (version 2.2) cannot read the new ETW files. When you try to use the batch files from my blog with IIS 8, you see the following errors:

Verifying that LogParser.exe is in the path... Done.
Starting the ETW session for full FTP tracing...
The command completed successfully.
Now reproduce your problem.
After you have reproduced your issue, hit any key to close the FTP
tracing session. Your trace events will be displayed automatically.
Closing the ETW session for full FTP tracing...
The command completed successfully.
Parsing the results - this may take a long time depending on the size of the trace...
Task aborted.
Cannot open <from-entity>: Trace file "C:\temp\ftp.etl" has been created on a OS version (6.3) that is not compatible with the current OS version

Statistics:
-----------
Elements processed: 0
Elements output: 0
Execution time: 0.06 seconds

I meant to research a workaround at the time, but one thing led to another and I simply forgot about doing so. But I needed to use ETW the other day when I was developing something, so that seemed like a good time to quit slacking and come up with an answer. :-)

With that in mind, I came up with a very easy workaround, which I will present here. Once again, this batch file requires LogParser to be installed on your system, but for the sake of brevity I have removed the lines that check for LogParser from this version of the batch file. (You can copy those lines from my previous blog posts if you want that functionality restored.)

Here's the way that this workaround is implemented: instead of creating an ETW log and then parsing it directly with LogParser, this new batch file invokes the built-in Windows TraceRpt command to parse the ETW file and save the results as a CSV file, which is then read by LogParser to view the results in a datagrid like the batch files in my previous blogs:

@echo off
rem ======================================================================
rem Clean up old log files
for %%a in (ETL CSV) do if exist "%~n0.%%a" del "%~n0.%%a"
echo Starting the ETW session for full FTP tracing...
LogMan.exe start "%~n0" -p "IIS: Ftp Server" 255 5 -ets
echo.
echo Now reproduce your problem.
echo.
echo After you have reproduced your issue, hit any key to close the FTP
echo tracing session. Your trace events will be displayed automatically.
echo.
pause>nul
rem ======================================================================
echo.
echo Closing the ETW session for full FTP tracing...
LogMan.exe stop "%~n0" -ets
rem ======================================================================
echo.
echo Parsing the results - this may take a long time depending on the size of the trace...
if exist "%~n0.etl" (
  TraceRpt.exe "%~n0.etl" -o "%~n0.csv" -of CSV
  LogParser.exe "SELECT [Clock-Time], [Event Name], Type, [User Data] FROM '%~n0.csv'" -i:csv -e 2 -o:DATAGRID -rtp 20
)
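Stripped of its prompts, the workaround is four commands run in order: start the ETW session, stop it, convert the .etl file to CSV with TraceRpt, and hand the CSV to LogParser. As a hedged illustration of that order, here is a Python sketch that builds the same command lines (every flag is copied from the batch file) and either prints them (dry_run=True, the default) or runs them with subprocess. The session parameter stands in for %~n0, the batch file's own name; "ftp" is a hypothetical default, and the sketch assumes, as the batch file does, that stopping the session leaves <session>.etl in the current directory.

```python
import os
import subprocess


def build_commands(session: str) -> list[list[str]]:
    """Return the batch file's four command lines as argument lists."""
    etl_file = f"{session}.etl"
    csv_file = f"{session}.csv"
    query = f"SELECT [Clock-Time], [Event Name], Type, [User Data] FROM '{csv_file}'"
    return [
        # Start an ETW session for the "IIS: Ftp Server" provider.
        ["LogMan.exe", "start", session, "-p", "IIS: Ftp Server", "255", "5", "-ets"],
        # Stop the session; the batch file expects the trace in <session>.etl.
        ["LogMan.exe", "stop", session, "-ets"],
        # Convert the binary trace to CSV with the built-in TraceRpt tool.
        ["TraceRpt.exe", etl_file, "-o", csv_file, "-of", "CSV"],
        # Let LogParser read the CSV instead of the .etl file.
        ["LogParser.exe", query, "-i:csv", "-e", "2", "-o:DATAGRID", "-rtp", "20"],
    ]


def run_trace(session: str = "ftp", dry_run: bool = True) -> None:
    """Walk through the same steps as the batch file."""
    start, stop, convert, view = build_commands(session)

    def run(cmd: list[str]) -> None:
        if dry_run:
            # Show the Windows command line that would be executed.
            print(subprocess.list2cmdline(cmd))
        else:
            subprocess.run(cmd, check=True)

    run(start)
    input("Reproduce your problem, then press Enter to stop tracing...")
    run(stop)
    # Like the batch file, only parse when a trace file was actually produced.
    if dry_run or os.path.exists(f"{session}.etl"):
        run(convert)
        run(view)
```

Keeping each command as an argument list lets subprocess handle the quoting of values such as "IIS: Ftp Server" and the LogParser query, which the batch file has to quote by hand.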

Here's another great thing about this new batch file: it also works down-level on Windows 7 and Windows Server 2008, so if you have been using my previous batch files with IIS 7, you can simply replace your old batch file with this new version. You will see a few differences between the results from my old batch files and this new version; namely, I included a couple of extra columns that I like to use for troubleshooting.


There is one last thing which I would like to mention in closing: I realize that it would be much easier on everyone if Microsoft simply released a new version of LogParser which works with the new ETW format, but unfortunately there are no plans at the moment to release a new version of LogParser. And trust me - I'm just as depressed about that fact as anyone else. :-(

Note: This blog was originally posted at http://blogs.msdn.com/robert_mcmurray/

Advanced Log Parser Part 7 - Creating a Generic Input Format Plug-In

28 February 2013 • by Bob • LogParser, Scripting, XML

In Part 6 of this series, I showed how to create a very basic COM-based input format provider for Log Parser. I wrote that blog post as a follow-up to an earlier blog post where I had written a more complex COM-based input format provider for Log Parser that worked with FTP RSCA events. My original blog post had resulted in several requests for me to write some easier examples about how to get started writing COM-based input format providers for Log Parser, and those appeals led me to write my last blog post:

Advanced Log Parser Part 6 - Creating a Simple Custom Input Format Plug-In

The example in that blog post simply returns static data, which was the easiest example that I could demonstrate.

For this follow-up blog post, I will illustrate how to create a simple COM-based input format plug-in for Log Parser that you can use as a generic provider for consuming data in text-based log files. Please bear in mind that this is just an example to help developers get started writing their own COM-based input format providers; you might be able to accomplish some of what I will demonstrate in this blog post by using the built-in Log Parser functionality. That being said, this still seems like the best example to help developers get started because consuming data in text-based log files was the most-often-requested example that I received.

In Review: Creating COM-based plug-ins for Log Parser

In my earlier blog posts, I mentioned that a COM plug-in has to support several public methods. You can look at those blog posts when you get the chance, but it is a worthwhile endeavor for me to copy the following information from those blog posts since it is essential to understanding how the code sample in this blog post is supposed to work.

| Method Name | Description |
|---|---|
| OpenInput | Opens your data source and sets up any initial environment settings. |
| GetFieldCount | Returns the number of fields that your plug-in will provide. |
| GetFieldName | Returns the name of a specified field. |
| GetFieldType | Returns the datatype of a specified field. |
| GetValue | Returns the value of a specified field. |
| ReadRecord | Reads the next record from your data source. |
| CloseInput | Closes your data source and cleans up any environment settings. |
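The way these seven methods fit together can be modeled in a few lines of Python. This is only a sketch under stated assumptions: StaticInputPlugin and drive() are hypothetical names, the method names stay in PascalCase so they line up with the table, and the order in which drive() calls them (open, describe the fields, read records until ReadRecord returns False, then close) is a simplified model of what a host such as Log Parser does, not a description of Log Parser's internals. Like the Part 6 example, the plug-in simply serves static data.

```python
# Field type codes, using the same values as the scriptlet's constants.
TYPE_INTEGER, TYPE_REAL, TYPE_STRING, TYPE_TIMESTAMP, TYPE_NULL = 1, 2, 3, 4, 5


class StaticInputPlugin:
    """Hypothetical stand-in for a COM input format plug-in; serves fixed rows."""

    def __init__(self) -> None:
        self._rows: list[tuple] = []
        self._index = -1

    def OpenInput(self, source: str) -> int:
        # A real plug-in would open `source`; this one just loads sample data.
        self._rows = [("alpha", 1), ("beta", 2)]
        self._index = -1
        return 0  # 0 signals success

    def GetFieldCount(self) -> int:
        return 2

    def GetFieldName(self, index: int) -> str:
        return ("Name", "Value")[index]

    def GetFieldType(self, index: int) -> int:
        return (TYPE_STRING, TYPE_INTEGER)[index]

    def ReadRecord(self) -> bool:
        # Advance to the next row; False signals the end of the data.
        self._index += 1
        return self._index < len(self._rows)

    def GetValue(self, index: int):
        return self._rows[self._index][index]

    def CloseInput(self, abort: bool) -> None:
        self._rows = []


def drive(plugin, source: str):
    """Simplified model of the order in which a host might call the methods."""
    status = plugin.OpenInput(source)
    if status != 0:
        raise OSError(status, f"OpenInput failed for {source!r}")
    try:
        count = plugin.GetFieldCount()
        schema = [(plugin.GetFieldName(i), plugin.GetFieldType(i)) for i in range(count)]
        rows = []
        while plugin.ReadRecord():
            rows.append(tuple(plugin.GetValue(i) for i in range(count)))
    finally:
        # Always give the plug-in a chance to release its data source.
        plugin.CloseInput(False)
    return schema, rows
```

Note that the schema (field count, names, and types) is fixed before the first ReadRecord call, which is why a plug-in has to work out its fields while opening the input.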

Once you have created and registered a COM-based input format plug-in, you call it from Log Parser by using something like the following syntax:

logparser.exe "SELECT * FROM FOO" -i:COM -iProgID:BAR

In the preceding example, FOO is a data source that makes sense to your plug-in, and BAR is the COM class name for your plug-in.

Creating a Generic COM plug-in for Log Parser

As I have done in my previous two blog posts about creating COM-based input format plug-ins, I'm going to demonstrate how to create a COM component by using a scriptlet since no compilation is required. This generic plug-in will parse any text-based log files where records are delimited by CRLF sequences and fields/columns are delimited by a separator that is defined as a constant in the code sample.

To create the sample COM plug-in, copy the following code into a text file, and save that file as "Generic.LogParser.Scriptlet.sct" to your computer. (Note: The *.SCT file extension tells Windows that this is a scriptlet file.)

<SCRIPTLET>
  <registration
    Description="Simple Log Parser Scriptlet"
    Progid="Generic.LogParser.Scriptlet"
    Classid="{4e616d65-6f6e-6d65-6973-526f62657274}"
    Version="1.00"
    Remotable="False" />
  <comment>
  EXAMPLE: logparser "SELECT * FROM 'C:\foo\bar.log'" -i:COM -iProgID:Generic.LogParser.Scriptlet
  </comment>
  <implements id="Automation" type="Automation">
    <method name="OpenInput">
      <parameter name="strFileName"/>
    </method>
    <method name="GetFieldCount" />
    <method name="GetFieldName">
      <parameter name="intFieldIndex"/>
    </method>
    <method name="GetFieldType">
      <parameter name="intFieldIndex"/>
    </method>
    <method name="ReadRecord" />
    <method name="GetValue">
      <parameter name="intFieldIndex"/>
    </method>
    <method name="CloseInput">
      <parameter name="blnAbort"/>
    </method>
  </implements>
  <SCRIPT LANGUAGE="VBScript">

Option Explicit

' Define the column separator in the log file.
Const strSeparator = "|"
' Define whether the first row contains column names.
Const blnHeaderRow = True

' Define the field type constants.
Const TYPE_INTEGER = 1
Const TYPE_REAL = 2
Const TYPE_STRING = 3
Const TYPE_TIMESTAMP = 4
Const TYPE_NULL = 5

' Declare variables.
Dim objFSO, objFile, blnFileOpen
Dim arrFieldNames, arrFieldTypes
Dim arrCurrentRecord

' Indicate that no file has been opened.
blnFileOpen = False

' --------------------------------------------------------------------------------
' Open the input session.
' --------------------------------------------------------------------------------
Public Function OpenInput(strFileName)
  Dim tmpCount
  ' Test for a file name.
  If Len(strFileName)=0 Then
    ' Return a status that the parameter is incorrect.
    OpenInput = 87
    blnFileOpen = False
  Else
    ' Test for single-quotes.
    If Left(strFileName,1)="'" And Right(strFileName,1)="'" Then
      ' Strip the single-quotes from the file name.
      strFileName = Mid(strFileName,2,Len(strFileName)-2)
    End If
    ' Open the file system object.
    Set objFSO = CreateObject("Scripting.Filesystemobject")
    ' Verify that the specified file exists.
    If objFSO.FileExists(strFileName) Then
      ' Open the specified file.
      Set objFile = objFSO.OpenTextFile(strFileName,1,False)
      ' Set a flag to indicate that the specified file is open.
      blnFileOpen = True
      ' Retrieve an initial record.
      Call ReadRecord()
      ' Redimension the array of field names.
      ReDim arrFieldNames(UBound(arrCurrentRecord))
      ' Loop through the record fields.
      For tmpCount = 0 To (UBound(arrFieldNames))
        ' Test for a header row.
        If blnHeaderRow = True Then
          arrFieldNames(tmpCount) = arrCurrentRecord(tmpCount)
        Else
          arrFieldNames(tmpCount) = "Field" & (tmpCount+1)
        End If
      Next
      ' Test for a header row.
      If blnHeaderRow = True Then
        ' Retrieve a second record.
        Call ReadRecord()
      End If
      ' Redimension the array of field types.
      ReDim arrFieldTypes(UBound(arrCurrentRecord))
      ' Loop through the record fields.
      For tmpCount = 0 To (UBound(arrFieldTypes))
        ' Test if the current field contains a date.
        If IsDate(arrCurrentRecord(tmpCount)) Then
          ' Specify the field type as a timestamp.
          arrFieldTypes(tmpCount) = TYPE_TIMESTAMP
        ' Test if the current field contains a number.
        ElseIf IsNumeric(arrCurrentRecord(tmpCount)) Then
          ' Test if the current field contains a decimal.
          If InStr(arrCurrentRecord(tmpCount),".") Then
            ' Specify the field type as a real number.
            arrFieldTypes(tmpCount) = TYPE_REAL
          Else
            ' Specify the field type as an integer.
            arrFieldTypes(tmpCount) = TYPE_INTEGER
          End If
        ' Test if the current field is null.
        ElseIf IsNull(arrCurrentRecord(tmpCount)) Then
          ' Specify the field type as NULL.
          arrFieldTypes(tmpCount) = TYPE_NULL
        ' Test if the current field is empty.
        ElseIf IsEmpty(arrCurrentRecord(tmpCount)) Then
          ' Specify the field type as NULL.
          arrFieldTypes(tmpCount) = TYPE_NULL
        ' Otherwise, assume it's a string.
        Else
          ' Specify the field type as a string.
          arrFieldTypes(tmpCount) = TYPE_STRING
        End If
      Next
      ' Temporarily close the log file.
      objFile.Close
      ' Re-open the specified file.
      Set objFile = objFSO.OpenTextFile(strFileName,1,False)
      ' Test for a header row.
      If blnHeaderRow = True Then
        ' Skip the first row.
        objFile.SkipLine
      End If
      ' Return success status.
      OpenInput = 0
    Else
      ' Return a file not found status.
      OpenInput = 2
    End If
  End If
End Function

' --------------------------------------------------------------------------------
' Close the input session.
' --------------------------------------------------------------------------------
Public Function CloseInput(blnAbort)
  ' Close the log file if it is still open.
  If blnFileOpen = True Then objFile.Close
  ' Free the objects.
  Set objFile = Nothing
  Set objFSO = Nothing
  ' Set a flag to indicate that the specified file is closed.
  blnFileOpen = False
End Function

' --------------------------------------------------------------------------------
' Return the count of fields.
' --------------------------------------------------------------------------------
Public Function GetFieldCount()
  ' Specify the default value.
  GetFieldCount = 0
  ' Test if a file is open.
  If (blnFileOpen = True) Then
    ' Test for at least one field name (UBound is 0 for a single column).
    If UBound(arrFieldNames) >= 0 Then
      ' Return the count of fields.
      GetFieldCount = UBound(arrFieldNames) + 1
    End If
  End If
End Function

' --------------------------------------------------------------------------------
' Return the specified field's name.
' --------------------------------------------------------------------------------
Public Function GetFieldName(intFieldIndex)
  ' Specify the default value.
  GetFieldName = Null
  ' Test if a file is open.
  If (blnFileOpen = True) Then
    ' Test if the index is valid.
    If intFieldIndex<=UBound(arrFieldNames) Then
      ' Return the specified field name.
      GetFieldName = arrFieldNames(intFieldIndex)
    End If
  End If
End Function

' --------------------------------------------------------------------------------
' Return the specified field's type.
' --------------------------------------------------------------------------------
Public Function GetFieldType(intFieldIndex)
  ' Specify the default value.
  GetFieldType = Null
  ' Test if a file is open.
  If (blnFileOpen = True) Then
    ' Test if the index is valid.
    If intFieldIndex<=UBound(arrFieldTypes) Then
      ' Return the specified field type.
      GetFieldType = arrFieldTypes(intFieldIndex)
    End If
  End If
End Function

' --------------------------------------------------------------------------------
' Return the specified field's value.
' --------------------------------------------------------------------------------
Public Function GetValue(intFieldIndex)
  ' Specify the default value.
  GetValue = Null
  ' Test if a file is open.
  If (blnFileOpen = True) Then
    ' Test if the index is valid.
    If intFieldIndex<=UBound(arrCurrentRecord) Then
      ' Return the specified field value based on the field type.
      Select Case arrFieldTypes(intFieldIndex)
        ' Use CLng rather than CInt so values above 32767 do not overflow.
        Case TYPE_INTEGER: GetValue = CLng(arrCurrentRecord(intFieldIndex))
        Case TYPE_REAL: GetValue = CDbl(arrCurrentRecord(intFieldIndex))
        Case TYPE_STRING: GetValue = CStr(arrCurrentRecord(intFieldIndex))
        Case TYPE_TIMESTAMP: GetValue = CDate(arrCurrentRecord(intFieldIndex))
        Case Else
          GetValue = Null
      End Select
    End If
  End If
End Function

' --------------------------------------------------------------------------------
' Read the next record, and return true or false if there is more data.
' --------------------------------------------------------------------------------
Public Function ReadRecord()
  ' Specify the default value.
  ReadRecord = False
  ' Test if a file is open.
  If (blnFileOpen = True) Then
    ' Test if there is more data.
    If objFile.AtEndOfStream Then
      ' Flag the log file as having no more data.
      ReadRecord = False
    Else
      ' Read the current record.
      arrCurrentRecord = Split(objFile.ReadLine,strSeparator)
      ' Flag the log file as having more data to process.
      ReadRecord = True
    End If
  End If
End Function

  </SCRIPT>
</SCRIPTLET>

After you have saved the scriptlet code to your computer, you register it by using the following syntax:

regsvr32 Generic.LogParser.Scriptlet.sct

At the very minimum, you can now use the COM plug-in with Log Parser by using syntax like the following:

logparser "SELECT * FROM 'C:\Foo\Bar.log'" -i:COM -iProgID:Generic.LogParser.Scriptlet

Next, let's analyze what this sample does.

Examining the Generic Scriptlet in Detail

Here are the different parts of the scriptlet and what they do:

- The <registration> section of the scriptlet sets up the COM registration information; you'll notice the COM component class name and GUID, as well as version information and a general description. (Note that you should generate your own GUID for each scriptlet that you create.)
- The <implements> section declares the public methods that the COM plug-in has to support.
- The <script> section contains the actual implementation:
  - The first part of the script section declares the global constants and variables that will be used:
    - The strSeparator constant defines the delimiter that is used to separate the data between fields/columns in a text-based log file.
    - The blnHeaderRow constant defines whether the first row in a text-based log file contains the names of the fields/columns:
      - If set to True, the plug-in will use the data in the first line of the log file to name the fields/columns.
      - If set to False, the plug-in will define generic field/column names like "Field1", "Field2", etc.
  - The second part of the script contains the required methods:
    - The OpenInput() method performs several tasks:
      - Locates and opens the log file that you specify in your SQL statement, or returns an error if the log file cannot be found.
      - Determines the number, names, and data types of fields/columns in the log file.
    - The CloseInput() method cleans up the session by closing the log file and destroying objects.
    - The GetFieldCount() method returns the number of fields/columns in the log file.
    - The GetFieldName() method returns the name of a field/column in the log file.
    - The GetFieldType() method returns the data type of a field/column in the log file. As a reminder, Log Parser supports the following five data types for COM plug-ins: TYPE_INTEGER, TYPE_REAL, TYPE_STRING, TYPE_TIMESTAMP, and TYPE_NULL.
    - The GetValue() method returns the data value of a field/column in the log file.
    - The ReadRecord() method moves to the next line in the log file. This method returns True if there is additional data to read, or False when the end of data is reached.
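The core algorithm in OpenInput() (name the columns from the header row or generate "FieldN" names, infer each column's type from the first data row, then convert values by type) can be tried without registering a COM component. The following Python sketch re-expresses that logic; it is not a Log Parser plug-in, and read_log, infer_type, and convert are hypothetical names. It differs from the scriptlet in three deliberate ways: only YYYY-MM-DD dates count as timestamps (VBScript's IsDate is far more permissive and locale-dependent), integers are detected with int() instead of by looking for a decimal point, and empty values map to TYPE_NULL while blank lines are skipped.

```python
from datetime import datetime

TYPE_INTEGER, TYPE_REAL, TYPE_STRING, TYPE_TIMESTAMP, TYPE_NULL = 1, 2, 3, 4, 5

SEPARATOR = "|"           # same role as strSeparator
HEADER_ROW = True         # same role as blnHeaderRow
DATE_FORMAT = "%Y-%m-%d"  # assumption: only ISO-style dates are timestamps


def infer_type(value: str) -> int:
    """Classify one sample value: empty, date, integer, real, then string."""
    if value == "":
        return TYPE_NULL
    try:
        datetime.strptime(value, DATE_FORMAT)
        return TYPE_TIMESTAMP
    except ValueError:
        pass
    try:
        int(value)
        return TYPE_INTEGER
    except ValueError:
        pass
    try:
        float(value)
        return TYPE_REAL
    except ValueError:
        return TYPE_STRING


def convert(value: str, field_type: int):
    """Turn a raw string into the Python value for its inferred type."""
    if field_type == TYPE_INTEGER:
        return int(value)
    if field_type == TYPE_REAL:
        return float(value)
    if field_type == TYPE_TIMESTAMP:
        return datetime.strptime(value, DATE_FORMAT)
    if field_type == TYPE_STRING:
        return value
    return None


def read_log(lines: list[str]):
    """Return field names, field types, and typed rows for a delimited log."""
    # Strip line endings and skip blank lines before splitting on the separator.
    records = [line.rstrip("\r\n").split(SEPARATOR) for line in lines if line.strip()]
    if not records:
        return [], [], []
    if HEADER_ROW:
        names, data = records[0], records[1:]
    else:
        names = [f"Field{i + 1}" for i in range(len(records[0]))]
        data = records
    # Like OpenInput(), infer every column's type from the first data row only.
    sample = data[0] if data else [""] * len(names)
    types = [infer_type(value) for value in sample]
    rows = [
        {name: convert(value, kind) for name, value, kind in zip(names, record, types)}
        for record in data
    ]
    return names, types, rows
```

As in the scriptlet, a later row whose value does not fit the type inferred from the first row (for example, text in a column that started out numeric) raises an error during conversion, so sampling more than one row would be the natural next refinement.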

Next, let's look at how to use the sample.

Using the Generic Scriptlet with Log Parser

As a sample log file for this blog, I'm going to use the data in the Sample XML File (books.xml) from MSDN. By running a quick Log Parser query that I will show later, I was able to export data from the XML file into a text file named "books.log" that represents an example of a simple log file format that I have had to work with in the past:
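For readers who want to build a similar sample file without Log Parser, the following Python sketch flattens <book> elements into the same pipe-delimited layout. It is an illustration only, not the Log Parser query referred to above; it assumes the books.xml layout in which id is an attribute of <book> and author, title, price, and publish_date are child elements, and it formats price with six decimal places to match the sample. books_to_pipe_log is a hypothetical name.

```python
import xml.etree.ElementTree as ET

COLUMNS = ["id", "publish_date", "author", "title", "price"]


def books_to_pipe_log(xml_text: str, separator: str = "|") -> list[str]:
    """Flatten <book> elements into delimited lines, header row first."""
    root = ET.fromstring(xml_text)
    lines = [separator.join(COLUMNS)]
    for book in root.iter("book"):
        price = float(book.findtext("price", default="0"))
        fields = [
            book.get("id", ""),  # id is an attribute, not a child element
            book.findtext("publish_date", default=""),
            book.findtext("author", default=""),
            book.findtext("title", default=""),
            f"{price:.6f}",  # six decimals, matching the sample log
        ]
        lines.append(separator.join(fields))
    return lines
```

A title or author that contained the separator character would break this format, so a real export would need to escape or reject such values.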

id|publish_date|author|title|price

bk101|2000-10-01|Gambardella, Matthew|XML Developer's Guide|44.950000

bk102|2000-12-16|Ralls, Kim|Midnight Rain|5.950000

bk103|2000-11-17|Corets, Eva|Maeve Ascendant|5.950000

bk104|2001-03-10|Corets, Eva|Oberon's Legacy|5.950000

bk105|2001-09-10|Corets, Eva|The Sundered Grail|5.950000

bk106|2000-09-02|Randall, Cynthia|Lover Birds|4.950000

bk107|2000-11-02|Thurman, Paula|Splish Splash|4.950000

bk108|2000-12-06|Knorr, Stefan|Creepy Crawlies|4.950000

bk109|2000-11-02|Kress, Peter|Paradox Lost|6.950000

bk110|2000-12-09|O'Brien, Tim|Microsoft .NET: The Programming Bible|36.950000

bk111|2000-12-01|O'Brien, Tim|MSXML3: A Comprehensive Guide|36.950000

bk112|2001-04-16|Galos, Mike|Visual Studio 7: A Comprehensive Guide|49.950000

In this example, the data is pretty easy to understand - the first row contains the list of field/column names, and the fields/columns are separated by the pipe ("|") character throughout the log file. That being said, you could easily change my sample code to use a different delimiter that your custom log files use.
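To make that layout concrete, here is a minimal JavaScript sketch of the same idea outside of Log Parser: treat the first line as the field names and split every following line on the delimiter. This is not the scriptlet's own code; the function name `parseDelimitedLog` and the default `"|"` delimiter are illustrative assumptions, and it simply skips blank lines.

```javascript
// Hypothetical helper: parses a delimited text log whose first line holds the field names.
function parseDelimitedLog(text, delimiter = "|") {
  // Split into lines and ignore blank ones.
  const lines = text.split(/\r?\n/).filter((line) => line.trim() !== "");
  if (lines.length === 0) {
    return { fields: [], records: [] };
  }
  const fields = lines[0].split(delimiter);
  const records = lines.slice(1).map((line) => {
    const values = line.split(delimiter);
    const record = {};
    // Pair each value with the field name at the same position; missing values become null.
    fields.forEach((name, i) => {
      record[name] = i < values.length ? values[i] : null;
    });
    return record;
  });
  return { fields, records };
}

// Example using the header and the first two data rows of books.log.
const sample = [
  "id|publish_date|author|title|price",
  "bk101|2000-10-01|Gambardella, Matthew|XML Developer's Guide|44.950000",
  "bk102|2000-12-16|Ralls, Kim|Midnight Rain|5.950000",
].join("\n");

const parsed = parseDelimitedLog(sample);
console.log(parsed.fields.length); // 5
console.log(parsed.records[1].author); // Ralls, Kim
```

Because the delimiter is a parameter, the same approach works for a log that uses tabs or semicolons instead of pipes.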

With that in mind, let's look at some Log Parser examples.

Example #1: Retrieving Data from a Custom Log

The first thing that you should try is to simply retrieve data from your custom plug-in, and the following query should serve as an example:

logparser "SELECT * FROM 'C:\sample\books.log'" -i:COM -iProgID:Generic.LogParser.Scriptlet

The above query will return results like the following:

| id | publish_date | author | title | price |
|---|---|---|---|---|
| bk101 | 10/1/2000 0:00:00 | Gambardella, Matthew | XML Developer's Guide | 44.950000 |
| bk102 | 12/16/2000 0:00:00 | Ralls, Kim | Midnight Rain | 5.950000 |
| bk103 | 11/17/2000 0:00:00 | Corets, Eva | Maeve Ascendant | 5.950000 |
| bk104 | 3/10/2001 0:00:00 | Corets, Eva | Oberon's Legacy | 5.950000 |
| bk105 | 9/10/2001 0:00:00 | Corets, Eva | The Sundered Grail | 5.950000 |
| bk106 | 9/2/2000 0:00:00 | Randall, Cynthia | Lover Birds | 4.950000 |
| bk107 | 11/2/2000 0:00:00 | Thurman, Paula | Splish Splash | 4.950000 |
| bk108 | 12/6/2000 0:00:00 | Knorr, Stefan | Creepy Crawlies | 4.950000 |
| bk109 | 11/2/2000 0:00:00 | Kress, Peter | Paradox Lost | 6.950000 |
| bk110 | 12/9/2000 0:00:00 | O'Brien, Tim | Microsoft .NET: The Programming Bible | 36.950000 |
| bk111 | 12/1/2000 0:00:00 | O'Brien, Tim | MSXML3: A Comprehensive Guide | 36.950000 |
| bk112 | 4/16/2001 0:00:00 | Galos, Mike | Visual Studio 7: A Comprehensive Guide | 49.950000 |

While the above example works as a good proof-of-concept for functionality, it's not overly useful, so let's look at additional examples.

Example #2: Reformatting Log File Data

Once you have established that you can retrieve data from your custom plug-in, you can start taking advantage of Log Parser's features to process your log file data. In this example, I will use several of the built-in functions to reformat the data:

logparser "SELECT id AS ID, TO_DATE(publish_date) AS Date, author AS Author, SUBSTR(title,0,20) AS Title, STRCAT(TO_STRING(TO_INT(FLOOR(price))),SUBSTR(TO_STRING(price),INDEX_OF(TO_STRING(price),'.'),3)) AS Price FROM 'C:\sample\books.log'" -i:COM -iProgID:Generic.LogParser.Scriptlet

The above query will return results like the following:

| ID | Date | Author | Title | Price |
|---|---|---|---|---|
| bk101 | 10/1/2000 | Gambardella, Matthew | XML Developer's Guid | 44.95 |
| bk102 | 12/16/2000 | Ralls, Kim | Midnight Rain | 5.95 |
| bk103 | 11/17/2000 | Corets, Eva | Maeve Ascendant | 5.95 |
| bk104 | 3/10/2001 | Corets, Eva | Oberon's Legacy | 5.95 |
| bk105 | 9/10/2001 | Corets, Eva | The Sundered Grail | 5.95 |
| bk106 | 9/2/2000 | Randall, Cynthia | Lover Birds | 4.95 |
| bk107 | 11/2/2000 | Thurman, Paula | Splish Splash | 4.95 |
| bk108 | 12/6/2000 | Knorr, Stefan | Creepy Crawlies | 4.95 |
| bk109 | 11/2/2000 | Kress, Peter | Paradox Lost | 6.95 |
| bk110 | 12/9/2000 | O'Brien, Tim | Microsoft .NET: The | 36.95 |
| bk111 | 12/1/2000 | O'Brien, Tim | MSXML3: A Comprehens | 36.95 |
| bk112 | 4/16/2001 | Galos, Mike | Visual Studio 7: A C | 49.95 |

This example formats the dates and prices more cleanly, and it truncates the book titles at 20 characters so they fit better on narrower screens.
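The price expression is the least obvious part of that query: it keeps the whole-number part of the price, then appends the decimal point and the next two characters of the price's string form. The following JavaScript sketch mirrors that logic, along with the 20-character title truncation, so you can predict the output without running Log Parser. It is an illustration rather than Log Parser code; the function names are hypothetical, and it assumes the price arrives as a string such as "44.950000", like the `TO_STRING(price)` values in the query.

```javascript
// Illustrative re-creation of the Example #2 expressions; not Log Parser code.

// Mirrors STRCAT(TO_STRING(TO_INT(FLOOR(price))), SUBSTR(TO_STRING(price), INDEX_OF(TO_STRING(price), '.'), 3)).
function formatPrice(priceText) {
  // Whole-number part via FLOOR, e.g. "44.950000" -> "44".
  const integerPart = String(Math.floor(Number(priceText)));
  // Decimal point plus the next two characters, e.g. ".95"; empty if there is no decimal point.
  const dot = priceText.indexOf(".");
  const fraction = dot >= 0 ? priceText.slice(dot, dot + 3) : "";
  return integerPart + fraction;
}

// Mirrors SUBSTR(title, 0, 20): keep at most the first 20 characters.
function truncateTitle(title) {
  return title.slice(0, 20);
}

console.log(formatPrice("44.950000")); // 44.95
console.log(truncateTitle("XML Developer's Guide")); // XML Developer's Guid
```

Note that, like the original expression, this truncates rather than rounds, so a price of 4.999 would be shown as 4.99.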

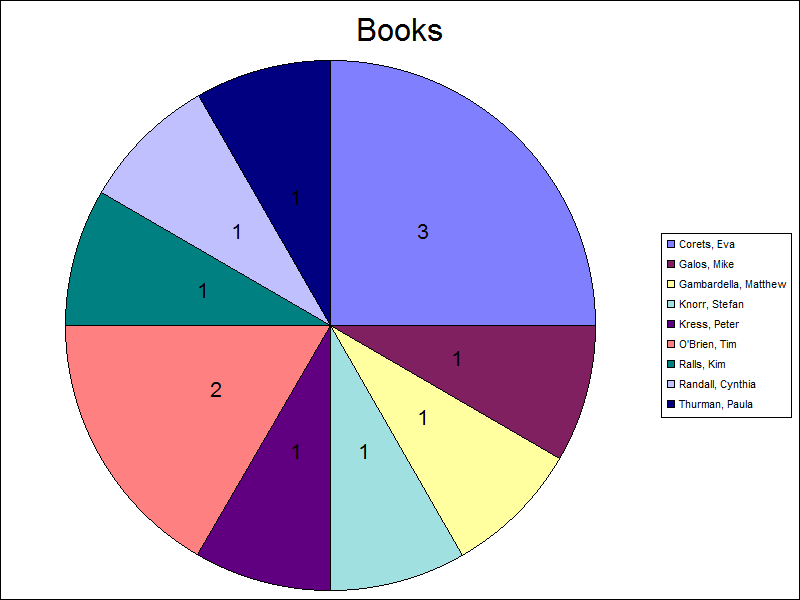

Example #3: Processing Log File Data

In addition to simply reformatting your data, you can use Log Parser to group, sort, count, total, etc., your data. The following example illustrates how to use Log Parser to count the number of books by author in the log file:

logparser "SELECT author AS Author, COUNT(Title) AS Books FROM 'C:\sample\books.log' GROUP BY Author ORDER BY Author" -i:COM -iProgID:Generic.LogParser.Scriptlet

The above query will return results like the following:

| Author | Books |
|---|---|
| Corets, Eva | 3 |
| Galos, Mike | 1 |
| Gambardella, Matthew | 1 |
| Knorr, Stefan | 1 |
| Kress, Peter | 1 |
| O'Brien, Tim | 2 |
| Ralls, Kim | 1 |
| Randall, Cynthia | 1 |
| Thurman, Paula | 1 |

The results are pretty straightforward: Log Parser parses the data and presents you with a list of alphabetized authors and the total number of books that were written by each author.
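If you want to see what the GROUP BY and ORDER BY clauses are doing, here is a rough JavaScript equivalent that counts records per author and then sorts the authors alphabetically. It only illustrates the aggregation; it is not how Log Parser evaluates queries internally. The name `countBooksByAuthor` is hypothetical, and the sort uses plain string comparison, which matches the output above for these sample names but may differ from Log Parser for mixed-case or non-ASCII values.

```javascript
// Rough equivalent of: SELECT author, COUNT(title) ... GROUP BY author ORDER BY author
function countBooksByAuthor(records) {
  const counts = new Map();
  for (const record of records) {
    // Count one book per record, grouped by the author field.
    counts.set(record.author, (counts.get(record.author) || 0) + 1);
  }
  // Sort the groups by author using plain string comparison.
  return [...counts.entries()]
    .sort(([a], [b]) => (a < b ? -1 : a > b ? 1 : 0))
    .map(([author, books]) => ({ author, books }));
}

// The author column from books.log, in file order.
const authors = [
  "Gambardella, Matthew", "Ralls, Kim", "Corets, Eva", "Corets, Eva",
  "Corets, Eva", "Randall, Cynthia", "Thurman, Paula", "Knorr, Stefan",
  "Kress, Peter", "O'Brien, Tim", "O'Brien, Tim", "Galos, Mike",
];
const summary = countBooksByAuthor(authors.map((author) => ({ author })));
for (const { author, books } of summary) {
  console.log(`${author}: ${books}`);
}
```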

Example #4: Creating Charts

You can also use data from your custom log file to create charts through Log Parser. If I modify the above example, all that I need to do is add a few parameters to create a chart:

logparser "SELECT author AS Author, COUNT(Title) AS Books INTO Authors.gif FROM 'C:\sample\books.log' GROUP BY Author ORDER BY Author" -i:COM -iProgID:Generic.LogParser.Scriptlet -fileType:GIF -groupSize:800x600 -chartType:Pie -categories:OFF -values:ON -legend:ON

The above query will create a chart like the following:

I admit that it's not a very pretty-looking chart - you can look at the other posts in my Log Parser series for some examples about making Log Parser charts more interesting.

Summary

In this blog post and my last post, I have illustrated a few examples that should help developers get started writing their own custom input format plug-ins for Log Parser. As I mentioned in each of the blog posts where I have used scriptlets for the COM objects, I would typically use C# or C++ to create a COM component, but using a scriptlet is much easier for demos because it doesn't require installing Visual Studio and compiling a DLL.

There is one last thing that I would like to mention before I finish this blog; I mentioned earlier that I had used Log Parser to reformat the sample books.xml file into a generic log file that I could use for the examples in this blog. Since Log Parser supports XML as an input format and it allows you to customize your output, I wrote the following simple Log Parser query to reformat the XML data into a format that I had often seen used for text-based log files:

logparser.exe "SELECT id,publish_date,author,title,price INTO books.log FROM books.xml" -i:xml -o:tsv -headers:ON -oSeparator:"|"

Actually, this ability to change data formats is one of the hidden gems of Log Parser; I have often used Log Parser to change the data from one type of log file to another - usually so that a different program can access the data. For example, if you were given the log file with a pipe ("|") delimiter like I used as an example, you could easily use Log Parser to convert that data into the CSV format so you could open it in Excel:

logparser.exe "SELECT id,publish_date,author,title,price INTO books.csv FROM books.log" -i:tsv -o:csv -headers:ON -iSeparator:"|" -oDQuotes:on
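The double quotes matter here because several author names contain commas ("Gambardella, Matthew"), which would otherwise be split into two CSV columns. Here is a small JavaScript sketch of the same conversion idea, assuming a simple rule of quoting every value and doubling any embedded quotes. The function name `pipeToCsv` is hypothetical, and Log Parser's own CSV output may apply quoting differently depending on field types and options.

```javascript
// Hypothetical converter: pipe-delimited log text -> CSV with each value in double quotes.
function pipeToCsv(text, delimiter = "|") {
  return text
    .split(/\r?\n/)
    .filter((line) => line.trim() !== "")
    .map((line) =>
      line
        .split(delimiter)
        // Quote every value and double any embedded quotes (the usual CSV escaping rule).
        .map((value) => '"' + value.replace(/"/g, '""') + '"')
        .join(",")
    )
    .join("\r\n");
}

const csv = pipeToCsv(
  "id|author|title\nbk101|Gambardella, Matthew|XML Developer's Guide"
);
console.log(csv);
```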

I hope these past few blog posts help you to get started writing your own custom input format plug-ins for Log Parser.

That's all for now. ;-)

Note: This blog was originally posted at http://blogs.msdn.com/robert_mcmurray/

Advanced Log Parser Part 6 - Creating a Simple Custom Input Format Plug-In

27 February 2013 • by Bob • LogParser, Scripting

In Part 4 of this series, I illustrated how to create a new COM-based input provider for Log Parser from a custom input format:

Advanced Log Parser Charts Part 4 - Adding Custom Input Formats

For the sample that I published in that blog, I wrote a plug-in that consumed FTP RSCA events, which is highly structured data, and it added a lot of complexity to my example. In the past ten months or so since I published my original blog, I've had several requests for additional information about how to get started writing COM-based input formats for Log Parser, so it occurred to me that perhaps I could have shown a simpler example to get people started instead of diving straight into parsing RSCA data. ;-)

With that in mind, I thought that I would write a couple of blog posts with simpler examples to help anyone who wants to get started writing custom input formats for Log Parser.

For this blog post, I will show you how to create a very basic COM-based input format provider for Log Parser that simply returns static data; you could use this sample as a template to quickly get up-and-running with the basic concepts. (I promise to follow this blog with another real-world example that is still easier to use than my RSCA example.)

A Reminder about Creating COM-based plug-ins for Log Parser

In the blog that I referred to earlier, I mentioned that a COM plug-in has to support the following public methods:

| Method Name | Description |
|---|---|
| OpenInput | Opens your data source and sets up any initial environment settings. |
| GetFieldCount | Returns the number of fields that your plug-in will provide. |
| GetFieldName | Returns the name of a specified field. |
| GetFieldType | Returns the datatype of a specified field. |
| GetValue | Returns the value of a specified field. |
| ReadRecord | Reads the next record from your data source. |
| CloseInput | Closes your data source and cleans up any environment settings. |
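It can help to look at these methods from the caller's side. The following JavaScript sketch is a hypothetical stand-in for Log Parser: it opens the input, asks for each field's name and type, reads records until `ReadRecord()` returns false, fetches each value with `GetValue()`, and finally calls `CloseInput()`. The exact call order inside Log Parser is an assumption inferred from the method descriptions above, and `runPlugin` and `makeArrayPlugin` are illustrative names, not part of any Log Parser API.

```javascript
// Hypothetical stand-in for Log Parser, calling a plug-in's methods in the order implied above.
function runPlugin(plugin, fromClause) {
  plugin.OpenInput(fromClause);
  try {
    const fieldCount = plugin.GetFieldCount();
    const names = [];
    const types = [];
    for (let i = 0; i < fieldCount; i++) {
      names.push(plugin.GetFieldName(i));
      types.push(plugin.GetFieldType(i));
    }
    const rows = [];
    // Keep reading until ReadRecord() reports that there is no more data.
    while (plugin.ReadRecord()) {
      const row = {};
      for (let i = 0; i < fieldCount; i++) {
        row[names[i]] = plugin.GetValue(i);
      }
      rows.push(row);
    }
    return { names, types, rows };
  } finally {
    // Always release the data source, mirroring CloseInput().
    plugin.CloseInput(false);
  }
}

// Tiny in-memory plug-in with the same method names, used only to exercise the driver.
function makeArrayPlugin(fieldNames, data) {
  let index = -1;
  let closed = false;
  return {
    OpenInput(source) { index = -1; closed = false; },
    GetFieldCount() { return fieldNames.length; },
    GetFieldName(i) { return fieldNames[i]; },
    GetFieldType(i) { return 3; }, // 3 = TYPE_STRING in the scriptlets' constants
    ReadRecord() { index += 1; return index < data.length; },
    GetValue(i) { return data[index][i]; },
    CloseInput(abort) { closed = true; },
    isClosed() { return closed; },
  };
}

const demo = makeArrayPlugin(["id", "title"], [["bk101", "XML Developer's Guide"], ["bk102", "Midnight Rain"]]);
const result = runPlugin(demo, "FOO");
console.log(result.rows.length); // 2
```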

Once you have created and registered a COM plug-in, you call it by using something like the following syntax:

logparser.exe "SELECT * FROM FOO" -i:COM -iProgID:BAR

In the preceding example, FOO is a data source that makes sense to your plug-in, and BAR is the COM class name for your plug-in.

Creating a Simple COM plug-in for Log Parser

Once again, I'm going to demonstrate how to create a COM component by using a scriptlet, which I like to use for demos because they are quick to design, they're easily portable, and updates take place immediately since no compilation is required. (All of that being said, if I were writing a real COM plug-in for Log Parser, I would use C# or C++.)

To create the sample COM plug-in, copy the following code into a text file, and save that file as "Simple.LogParser.Scriptlet.sct" to your computer. (Note: The *.SCT file extension tells Windows that this is a scriptlet file.)

```xml
<SCRIPTLET>

<registration
    Description="Simple Log Parser Scriptlet"
    Progid="Simple.LogParser.Scriptlet"
    Classid="{4e616d65-6f6e-6d65-6973-526f62657274}"
    Version="1.00"
    Remotable="False" />

<comment>
EXAMPLE: logparser "SELECT * FROM FOOBAR" -i:COM -iProgID:Simple.LogParser.Scriptlet
</comment>

<implements id="Automation" type="Automation">
    <method name="OpenInput">
        <parameter name="strValue"/>
    </method>
    <method name="GetFieldCount" />
    <method name="GetFieldName">
        <parameter name="intFieldIndex"/>
    </method>
    <method name="GetFieldType">
        <parameter name="intFieldIndex"/>
    </method>
    <method name="ReadRecord" />
    <method name="GetValue">
        <parameter name="intFieldIndex"/>
    </method>
    <method name="CloseInput">
        <parameter name="blnAbort"/>
    </method>
</implements>

<SCRIPT LANGUAGE="VBScript">

Option Explicit

Const MAX_RECORDS = 5
Dim intRecordCount

' --------------------------------------------------------------------------------
' Open the input session.
' --------------------------------------------------------------------------------

Public Function OpenInput(strValue)
    intRecordCount = 0
End Function

' --------------------------------------------------------------------------------
' Close the input session.
' --------------------------------------------------------------------------------

Public Function CloseInput(blnAbort)
End Function

' --------------------------------------------------------------------------------
' Return the count of fields.
' --------------------------------------------------------------------------------

Public Function GetFieldCount()
    GetFieldCount = 5
End Function

' --------------------------------------------------------------------------------
' Return the specified field's name.
' --------------------------------------------------------------------------------

Public Function GetFieldName(intFieldIndex)
    Select Case CInt(intFieldIndex)
        Case 0: GetFieldName = "INTEGER"
        Case 1: GetFieldName = "REAL"
        Case 2: GetFieldName = "STRING"
        Case 3: GetFieldName = "TIMESTAMP"
        Case 4: GetFieldName = "NULL"
        Case Else
            GetFieldName = Null
    End Select
End Function

' --------------------------------------------------------------------------------
' Return the specified field's type.
' --------------------------------------------------------------------------------

Public Function GetFieldType(intFieldIndex)
    ' Define the field type constants.
    Const TYPE_INTEGER = 1
    Const TYPE_REAL = 2
    Const TYPE_STRING = 3
    Const TYPE_TIMESTAMP = 4
    Const TYPE_NULL = 5
    Select Case CInt(intFieldIndex)
        Case 0: GetFieldType = TYPE_INTEGER
        Case 1: GetFieldType = TYPE_REAL
        Case 2: GetFieldType = TYPE_STRING
        Case 3: GetFieldType = TYPE_TIMESTAMP
        Case 4: GetFieldType = TYPE_NULL
        Case Else
            GetFieldType = Null
    End Select
End Function

' --------------------------------------------------------------------------------
' Return the specified field's value.
' --------------------------------------------------------------------------------

Public Function GetValue(intFieldIndex)
    Select Case CInt(intFieldIndex)
        Case 0: GetValue = 1
        Case 1: GetValue = 1.0
        Case 2: GetValue = "One"
        Case 3: GetValue = Now
        Case Else
            GetValue = Null
    End Select
End Function

' --------------------------------------------------------------------------------
' Read the next record, and return true or false if there is more data.
' --------------------------------------------------------------------------------

Public Function ReadRecord()
    intRecordCount = intRecordCount + 1
    If intRecordCount <= MAX_RECORDS Then
        ReadRecord = True
    Else
        ReadRecord = False
    End If
End Function

</SCRIPT>

</SCRIPTLET>
```

After you have saved the scriptlet code to your computer, you register it by using the following syntax:

regsvr32 Simple.LogParser.Scriptlet.sct

At the very minimum, you can now use the COM plug-in with Log Parser by using syntax like the following:

logparser "SELECT * FROM FOOBAR" -i:COM -iProgID:Simple.LogParser.Scriptlet

This will return results like the following:

| INTEGER | REAL | STRING | TIMESTAMP | NULL |
|---|---|---|---|---|
| 1 | 1.000000 | One | 2/26/2013 19:42:12 | - |
| 1 | 1.000000 | One | 2/26/2013 19:42:12 | - |
| 1 | 1.000000 | One | 2/26/2013 19:42:12 | - |
| 1 | 1.000000 | One | 2/26/2013 19:42:12 | - |
| 1 | 1.000000 | One | 2/26/2013 19:42:12 | - |

Statistics:

Elements processed: 5

Elements output: 5

Execution time: 0.01 seconds

Next, let's analyze what this sample does.

Examining the Sample Scriptlet Contents in Detail

Here are the different parts of the scriptlet and what they do:

- The <registration> section of the scriptlet sets up the COM registration information; you'll notice the COM component class name and GUID, as well as version information and a general description. (Note that you should generate your own GUID for each scriptlet that you create.)

- The <implements> section declares the public methods that the COM plug-in has to support.

- The <script> section contains the actual implementation:

  - The OpenInput() method opens your data source, although in this example it only initializes the record count. (Note that the value that is passed to the method is ignored in this example.)

  - The CloseInput() method would normally clean up your session (e.g., close a data file or database), but it doesn't do anything in this example.

  - The GetFieldCount() method returns the number of data fields in each record of your data, which is static in this example.

  - The GetFieldName() method returns the name of the field whose index is passed to the method as a number; the names are static in this example.

  - The GetFieldType() method returns the data type of the field whose index is passed to the method as a number; the types are statically defined in this example. As a reminder, Log Parser supports the following five data types for COM plug-ins: TYPE_INTEGER, TYPE_REAL, TYPE_STRING, TYPE_TIMESTAMP, and TYPE_NULL.

  - The GetValue() method returns the data value of the field whose index is passed to the method as a number. Once again, the data values are statically defined in this example.

  - The ReadRecord() method moves to the next record in your data set; this method returns True if there is data to read, or False when the end of data is reached. In this example, the method increments the record counter and sets the status based on whether the maximum number of records has been reached.
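If you want to check the record-counting logic without registering anything, the following JavaScript port of the scriptlet's behavior can be run under Node.js. It is an illustrative translation only: Log Parser still needs the registered COM scriptlet, `createSimplePlugin` is a hypothetical name, and the type constants (1 through 5) are copied from the scriptlet's GetFieldType() function.

```javascript
// Illustrative JavaScript port of the Simple.LogParser.Scriptlet logic (not usable by Log Parser).
const TYPE_INTEGER = 1, TYPE_REAL = 2, TYPE_STRING = 3, TYPE_TIMESTAMP = 4, TYPE_NULL = 5;
const MAX_RECORDS = 5;

function createSimplePlugin() {
  let recordCount = 0;
  const names = ["INTEGER", "REAL", "STRING", "TIMESTAMP", "NULL"];
  const types = [TYPE_INTEGER, TYPE_REAL, TYPE_STRING, TYPE_TIMESTAMP, TYPE_NULL];
  const inRange = (i) => i >= 0 && i < names.length;
  return {
    OpenInput(value) { recordCount = 0; }, // the value is ignored, as in the scriptlet
    CloseInput(abort) {},                  // nothing to clean up
    GetFieldCount() { return names.length; },
    GetFieldName(i) { return inRange(i) ? names[i] : null; },
    GetFieldType(i) { return inRange(i) ? types[i] : null; },
    GetValue(i) {
      switch (i) {
        case 0: return 1;
        case 1: return 1.0;
        case 2: return "One";
        case 3: return new Date(); // the scriptlet returns Now
        default: return null;      // field 4 (NULL) and anything out of range
      }
    },
    ReadRecord() {
      // Increment first, then report whether the limit has been passed.
      recordCount += 1;
      return recordCount <= MAX_RECORDS;
    },
  };
}

const plugin = createSimplePlugin();
plugin.OpenInput("FOOBAR");
let rowsRead = 0;
while (plugin.ReadRecord()) rowsRead += 1;
console.log(rowsRead); // 5
```

Because ReadRecord() increments the counter before comparing it with MAX_RECORDS, the sixth call is the first to return false, which is why the sample query returns exactly five rows.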

Summary

That wraps up the simplest example that I could put together of a COM-based input provider for Log Parser. In my next blog, I'll show how to create a generic COM-based input provider for Log Parser that you can use to parse text-based log files.

Note: This blog was originally posted at http://blogs.msdn.com/robert_mcmurray/

Advanced Log Parser Charts Part 5 - Creating a Generic Chart Color Script

25 August 2012 • by Bob • LogParser, Scripting

In Part 5 of this series, I'll show you how to create a generic script that you can use to add some color to your Log Parser charts. As I mentioned in Part 1 of this series, the default colors for Log Parser charts are really dull and boring. For example, if I parse one month's worth of log files from one of my low-volume websites with the following query:

logparser.exe "SELECT date,COUNT(*) AS Hits INTO HITS.gif FROM *.log GROUP BY date ORDER BY date" -i:w3c -o:CHART -chartType:ColumnClustered -chartTitle:"" -q:ON

Log Parser will create the following ugly daily hits chart:

Generic Color Change Script

Here's the background story for this blog: I have a collection of scripts that I use to format my charts, several of which have faithfully served as the fodder for this blog series. With that in mind, I had a situation recently where I was querying logs with a series of data just like this, and of course the resulting charts were kind of hideous to look at. In one of the scripts that I often use, I create an array of colors to use, and then I apply the various colors to the individual data points in the series.

In the past I have always hard-coded the length for the array of colors based on the data that I am working with, but in this situation I had no idea how many data points I would have, so I decided to put together a quick script with an array that would work with a series of any size.
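The trick for handling a series of any size is modulo division: because x % length always falls between 0 and length - 1, the index wraps back to the start of the palette instead of running past the end of the array. Here is a standalone JavaScript sketch of just that indexing logic, with no chart objects involved; `assignColors` is a hypothetical helper name.

```javascript
// Cycle through a fixed palette for any number of data points (illustrative helper).
function assignColors(pointCount, palette) {
  const assigned = [];
  for (let x = 0; x < pointCount; ++x) {
    // x % palette.length is always 0..palette.length-1, so the index wraps instead of overflowing.
    assigned.push(palette[x % palette.length]);
  }
  return assigned;
}

const palette = ["#ffff99", "#ff99ff", "#ff9999"];
console.log(assignColors(5, palette).join(", "));
// #ffff99, #ff99ff, #ff9999, #ffff99, #ff99ff
```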

Here's the resulting script:

```javascript
// Set a default color for the chart's data.
chart.SeriesCollection(0).Interior.Color = "#ffcccc";

// Define a short array of colors.
var colors = [
    "#ffff99", "#ff99ff", "#ff9999", "#99ffff", "#99ff99", "#9999ff",
    "#ffffcc", "#ffccff", "#ffcccc", "#ccffff", "#ccffcc", "#ccccff"
];

// Loop through the data points in the series.
for (var x = 0; x < chart.SeriesCollection(0).Points.Count; ++x)
{
    // Set the color for the data point based on modulo division of the array length.
    chart.SeriesCollection(0).Points(x).Interior.Color = colors[x % colors.length];
}
```

That's all that there is to the script - it's pretty simple. If I take the above script and save it as "FormatChart.js", I can use that script with my Log Parser query from earlier by adding an extra parameter to the command:

logparser.exe "SELECT date,COUNT(*) AS Hits INTO HITS.gif FROM *.log GROUP BY date ORDER BY date" -i:w3c -o:CHART -chartType:ColumnClustered -chartTitle:"" -q:ON -config:FormatChart.js

Now Log Parser will create the following daily hits chart with a great deal more color to it:

Okay - perhaps that's not the best color palette, but you get the idea. It looks even better when I change the query to use 3D charts:

logparser.exe "SELECT date,COUNT(*) AS Hits INTO HITS.gif FROM *.log GROUP BY date ORDER BY date" -i:w3c -o:CHART -chartType:Column3D -chartTitle:"" -q:ON -config:FormatChart.js

The above query creates the following chart:

Color Changing Pie Charts

I'd like to make a quick change to the script in order to make it work a little better with a pie chart:

```javascript
// Set a default color for the chart's data.
chart.SeriesCollection(0).Interior.Color = "#cccccc";

// Define a short array of colors.
var colors = [
    "#cc3333", "#3333cc", "#33cc33", "#33cccc", "#cccc33", "#cc33cc"
];

// Loop through the data points in the series.
for (var x = 0; x < chart.SeriesCollection(0).Points.Count; ++x)
{
    // Set the color for the data point based on modulo division of the array length.
    chart.SeriesCollection(0).Points(x).Interior.Color = colors[x % colors.length];
}

// Rotate the chart 180 degrees - just so it looks a little better.
chartSpace.Charts(0).PlotArea.RotateClockwise();
chartSpace.Charts(0).PlotArea.RotateClockwise();
```

For this query I'd like to see a breakdown by HTTP status, which requires a small change to the Log Parser query:

logparser.exe "SELECT sc-status AS Status,COUNT(*) AS Hits INTO HITS.gif FROM *.log GROUP BY Status ORDER BY Status" -i:w3c -o:CHART -chartType:PieExploded3D -chartTitle:"" -q:ON -config:FormatChart.js

The above query creates the following chart:

Summary

That wraps it up for this blog - I hope that I've given you some ideas for ways that you can easily add some colors to some dull-looking Log Parser charts.

Note: This blog was originally posted at http://blogs.msdn.com/robert_mcmurray/

Advanced Log Parser Charts Part 4 - Adding Custom Input Formats

25 May 2012 • by Bob • IIS, LogParser

In Part 4 of this series, I'll show you how you can do a couple of cool things:

- Create a new input format for Log Parser (and I'll use FTP RSCA data as an example)

- Create charts from your custom input format

For the data source for my custom plug-in, I thought that it would be cool to consume the data from FTP 7's Runtime Status (RSCA). If you've followed some of my old blogs, you would have seen that around five years ago I wrote the following pair of blogs about programmatically viewing FTP 7 sessions:

I'm going to recycle some of the FTP RSCA concepts from those blogs in order to create my COM plug-in.

Log Parser Input Formats

If you're like me, you already realize that the existing features of Log Parser simply rock. But what most people don't realize is that Log Parser lets you extend the functionality by adding new input formats, so you can consume the data from any place where you feel compelled to sit down and write your own Log Parser module.

As a quick reminder, Log Parser supports the following built-in input formats:

- IIS Log File Input Formats

  - IISW3C: parses IIS log files in the W3C Extended Log File Format.

  - IIS: parses IIS log files in the Microsoft IIS Log File Format.

  - BIN: parses IIS log files in the Centralized Binary Log File Format.

  - IISODBC: returns database records from the tables logged to by IIS when configured to log in the ODBC Log Format.

  - HTTPERR: parses HTTP error log files generated by Http.sys.

  - URLSCAN: parses log files generated by the URLScan IIS filter.

- Generic Text File Input Formats

  - CSV: parses comma-separated values text files.

  - TSV: parses tab-separated and space-separated values text files.

  - XML: parses XML text files.

  - W3C: parses text files in the W3C Extended Log File Format.

  - NCSA: parses web server log files in the NCSA Common, Combined, and Extended Log File Formats.

  - TEXTLINE: returns lines from generic text files.

  - TEXTWORD: returns words from generic text files.

- System Information Input Formats

  - EVT: returns events from the Windows Event Log and from Event Log backup files (.evt files).

  - FS: returns information on files and directories.

  - REG: returns information on registry values.

  - ADS: returns information on Active Directory objects.

- Special-purpose Input Formats

  - NETMON: parses network capture files created by NetMon.

  - ETW: parses Event Tracing for Windows (ETW) trace log files and live sessions.

  - COM: provides an interface to Custom Input Format COM plug-ins.

This last input format, COM, is how you interface with Log Parser in order to create your own input formats. When you install Log Parser, there are a few COM-based samples in the Log Parser directory, and you can take a look at those when you get the chance.

A Brief Introduction to Creating COM plug-ins for Log Parser

To start with, your COM plug-in has to support a few public methods - and each of these will become clearer when I create my plug-in later:

| Method Name | Description |
|---|---|
| OpenInput | Opens your data source and sets up any initial environment settings. |
| GetFieldCount | Returns the number of fields that your plug-in will provide. |
| GetFieldName | Returns the name of a specified field. |
| GetFieldType | Returns the datatype of a specified field. |
| GetValue | Returns the value of a specified field. |
| ReadRecord | Reads the next record from your data source. |
| CloseInput | Closes your data source and cleans up any environment settings. |

After you've created and registered your COM plug-in, you will call it by using something like the following syntax:

logparser "SELECT * FROM FOO" -i:COM -iProgID:BAR

In this example, FOO is some data source that makes sense to your plug-in, and BAR is the COM class name for your plug-in.

Creating the COM plug-in for FTP RSCA Data

I'm going to demonstrate how to create a COM component as a scriptlet, and then I'll call that from Log Parser to process the data. I chose to use a scriptlet for this demo because they are quick to design and they're easily portable. Since no compilation is required, updates take place on the fly. All of that being said, if I were writing a real COM plug-in for Log Parser, I would use C# or C++.

To create the sample COM plug-in, copy the following code into a text file, and save that file as "MSUtil.LogQuery.FtpRscaScriptlet.sct" to your computer. (Note: The *.SCT file extension tells Windows that this is a scriptlet file.)

```xml
<SCRIPTLET>

<registration
    Description="FTP RSCA for Log Parser Scriptlet"
    Progid="MSUtil.LogQuery.FtpRscaScriptlet"
    Classid="{4e616d65-6f6e-6d65-6973-526f62657274}"
    Version="1.00"
    Remotable="False" />

<comment>
EXAMPLE 1: logparser "SELECT * FROM ftp.example.com" -i:COM -iProgID:MSUtil.LogQuery.FtpRscaScriptlet
EXAMPLE 2: logparser "SELECT * FROM 1" -i:COM -iProgID:MSUtil.LogQuery.FtpRscaScriptlet
</comment>

<implements id="Automation" type="Automation">
    <method name="OpenInput">
        <parameter name="strValue"/>
    </method>
    <method name="GetFieldCount" />
    <method name="GetFieldName">
        <parameter name="intFieldIndex"/>
    </method>
    <method name="GetFieldType">
        <parameter name="intFieldIndex"/>
    </method>
    <method name="ReadRecord" />
    <method name="GetValue">
        <parameter name="intFieldIndex"/>
    </method>
    <method name="CloseInput">
        <parameter name="blnAbort"/>
    </method>
</implements>

<SCRIPT LANGUAGE="VBScript">

Option Explicit

Dim objAdminManager,objSessionDictionary
Dim objSitesSection,objSitesCollection
Dim objSiteElement,objFtpServerElement
Dim objSessionsElement,objSessionElement
Dim intSiteElementPos,intSession,intRecordIndex
Dim clsSession

intRecordIndex = -1

' --------------------------------------------------------------------------------
' Open an input session that reads FTP RSCA data and stores it in a dictionary object.
' --------------------------------------------------------------------------------

Public Function OpenInput(strValue)
    Set objSessionDictionary = CreateObject("Scripting.Dictionary")
    Set objAdminManager = CreateObject("Microsoft.ApplicationHost.WritableAdminManager")
    objAdminManager.CommitPath = "MACHINE/WEBROOT/APPHOST"
    Set objSitesSection = objAdminManager.GetAdminSection("system.applicationHost/sites", "MACHINE/WEBROOT/APPHOST")
    Set objSitesCollection = objSitesSection.Collection
    ' A numeric FROM value is treated as a site ID; anything else as a site name.
    If IsNumeric(strValue) Then
        intSiteElementPos = FindElement(objSitesCollection, "site", Array("id", strValue))
    Else
        intSiteElementPos = FindElement(objSitesCollection, "site", Array("name", strValue))
    End If
    If intSiteElementPos > -1 Then
        Set objSiteElement = objSitesCollection.Item(intSiteElementPos)
        Set objFtpServerElement = objSiteElement.ChildElements.Item("ftpServer")
        Set objSessionsElement = objFtpServerElement.ChildElements.Item("sessions").Collection
        For intSession = 0 To CLng(objSessionsElement.Count)-1
            Set objSessionElement = objSessionsElement.Item(intSession)
            Set clsSession = New Session
            clsSession.CurrentDateTime = GetUtcDate()
            clsSession.ClientIp = objSessionElement.GetPropertyByName("clientIp").Value
            clsSession.SessionId = objSessionElement.GetPropertyByName("sessionId").Value
            clsSession.SessionStartTime = objSessionElement.GetPropertyByName("sessionStartTime").Value
            clsSession.UserName = objSessionElement.GetPropertyByName("userName").Value
            clsSession.CurrentCommand = objSessionElement.GetPropertyByName("currentCommand").Value
            clsSession.PreviousCommand = objSessionElement.GetPropertyByName("previousCommand").Value
            clsSession.CommandStartTime = objSessionElement.GetPropertyByName("commandStartTime").Value
            clsSession.BytesSent = objSessionElement.GetPropertyByName("bytesSent").Value
            clsSession.BytesReceived = objSessionElement.GetPropertyByName("bytesReceived").Value
            clsSession.LastErrorStatus = objSessionElement.GetPropertyByName("lastErrorStatus").Value
            objSessionDictionary.Add intSession,clsSession
        Next
    End If
End Function

' --------------------------------------------------------------------------------
' Close the input session.
' --------------------------------------------------------------------------------

Public Function CloseInput(blnAbort)
    intRecordIndex = -1
    objSessionDictionary.RemoveAll
End Function

' --------------------------------------------------------------------------------
' Return the count of fields.
' --------------------------------------------------------------------------------

Public Function GetFieldCount()
    GetFieldCount = 11
End Function

' --------------------------------------------------------------------------------
' Return the specified field's name.
' --------------------------------------------------------------------------------

Public Function GetFieldName(intFieldIndex)
    Select Case intFieldIndex
        Case 0: GetFieldName = "currentDateTime"
        Case 1: GetFieldName = "clientIp"
        Case 2: GetFieldName = "sessionId"
        Case 3: GetFieldName = "sessionStartTime"
        Case 4: GetFieldName = "userName"
        Case 5: GetFieldName = "currentCommand"
        Case 6: GetFieldName = "previousCommand"
        Case 7: GetFieldName = "commandStartTime"
        Case 8: GetFieldName = "bytesSent"
        Case 9: GetFieldName = "bytesReceived"
        Case 10: GetFieldName = "lastErrorStatus"
    End Select
End Function

' --------------------------------------------------------------------------------
' Return the specified field's type.
' --------------------------------------------------------------------------------

Public Function GetFieldType(intFieldIndex)
    Const TYPE_INTEGER = 1
    Const TYPE_REAL = 2
    Const TYPE_STRING = 3
    Const TYPE_TIMESTAMP = 4
    Const TYPE_NULL = 5
    Select Case intFieldIndex
        Case 0: GetFieldType = TYPE_STRING
        Case 1: GetFieldType = TYPE_STRING
        Case 2: GetFieldType = TYPE_STRING
        Case 3: GetFieldType = TYPE_STRING
        Case 4: GetFieldType = TYPE_STRING
        Case 5: GetFieldType = TYPE_STRING
        Case 6: GetFieldType = TYPE_STRING
        Case 7: GetFieldType = TYPE_STRING
        Case 8: GetFieldType = TYPE_INTEGER
        Case 9: GetFieldType = TYPE_INTEGER
        Case 10: GetFieldType = TYPE_INTEGER
    End Select
End Function

' --------------------------------------------------------------------------------
' Return the specified field's value.
' --------------------------------------------------------------------------------

Public Function GetValue(intFieldIndex)
    If objSessionDictionary.Count > 0 Then
        Select Case intFieldIndex
            Case 0: GetValue = objSessionDictionary(intRecordIndex).CurrentDateTime
            Case 1: GetValue = objSessionDictionary(intRecordIndex).ClientIp
            Case 2: GetValue = objSessionDictionary(intRecordIndex).SessionId
            Case 3: GetValue = objSessionDictionary(intRecordIndex).SessionStartTime
            Case 4: GetValue = objSessionDictionary(intRecordIndex).UserName
            Case 5: GetValue = objSessionDictionary(intRecordIndex).CurrentCommand
            Case 6: GetValue = objSessionDictionary(intRecordIndex).PreviousCommand
            Case 7: GetValue = objSessionDictionary(intRecordIndex).CommandStartTime
            Case 8: GetValue = objSessionDictionary(intRecordIndex).BytesSent
            Case 9: GetValue = objSessionDictionary(intRecordIndex).BytesReceived
            Case 10: GetValue = objSessionDictionary(intRecordIndex).LastErrorStatus
        End Select
    End If
End Function

' --------------------------------------------------------------------------------
' Read the next record, and return true or false if there is more data.
' --------------------------------------------------------------------------------

Public Function ReadRecord()
    ' Default to False so that an empty session list ends the query cleanly.
    ReadRecord = False
    If objSessionDictionary.Count > 0 Then
        If intRecordIndex < (objSessionDictionary.Count-1) Then
            intRecordIndex = intRecordIndex + 1
            ReadRecord = True
        End If
    End If
End Function

' --------------------------------------------------------------------------------
' Return the current UTC date/time.
' --------------------------------------------------------------------------------

Private Function GetUtcDate()
    Dim dtmNow,dtmUtc,strUtc
    Dim objShell,lngActiveTimeBias
    dtmNow = Now()
    Set objShell = CreateObject("WScript.Shell")
    ' ActiveTimeBias is the number of minutes to add to local time to get UTC.
    lngActiveTimeBias = CLng(objShell.RegRead("HKEY_LOCAL_MACHINE\System\CurrentControlSet\Control\TimeZoneInformation\ActiveTimeBias"))
    dtmUtc = DateAdd("n",lngActiveTimeBias,dtmNow)
    strUtc = Year(dtmUtc) & "-" & _
        Right("0" & Month(dtmUtc),2) & "-" & _
        Right("0" & Day(dtmUtc),2) & "T" & _
        Right("0" & Hour(dtmUtc),2) & ":" & _
        Right("0" & Minute(dtmUtc),2) & ":" & _
        Right("0" & Second(dtmUtc),2) & ".000Z"
    GetUtcDate = strUtc
End Function

' --------------------------------------------------------------------------------
' Return an element's position in a collection.
' --------------------------------------------------------------------------------

Private Function FindElement(objCollection, strElementTagName, arrValuesToMatch)
    Dim i,elem,matches,j,prop,value
    For i = 0 To CInt(objCollection.Count) - 1
        Set elem = objCollection.Item(i)
        If elem.Name = strElementTagName Then
            matches = True
            For j = 0 To UBound(arrValuesToMatch) Step 2
                Set prop = elem.GetPropertyByName(arrValuesToMatch(j))
                value = prop.Value
                If Not IsNull(value) Then
                    value = CStr(value)
                End If
                If Not value = CStr(arrValuesToMatch(j + 1)) Then
                    matches = False
                    Exit For
                End If
            Next
            If matches Then
                Exit For
            End If
        End If
    Next
    If matches Then
        FindElement = i
    Else
        FindElement = -1
    End If
End Function

' --------------------------------------------------------------------------------
' Define a generic class for holding session data.
' --------------------------------------------------------------------------------

Class Session
    Public CurrentDateTime
    Public ClientIp
    Public SessionId
    Public SessionStartTime
    Public UserName
    Public CurrentCommand
    Public PreviousCommand
    Public CommandStartTime
    Public BytesSent
    Public BytesReceived
    Public LastErrorStatus
End Class

</SCRIPT>

</SCRIPTLET>
```

After you've saved the scriptlet code to your computer, you will register it by using the following syntax:

regsvr32 MSUtil.LogQuery.FtpRscaScriptlet.sct

At the very minimum, you can now use the COM plug-in with Log Parser by using syntax like the following:

logparser "SELECT * FROM ftp.example.com" -i:COM -iProgID:MSUtil.LogQuery.FtpRscaScriptlet

Next, we'll analyze what the scriptlet does.

Examining the COM plug-in in Detail

Here are the different parts of the scriptlet and what they do:

- The <registration> section of the scriptlet sets up the COM registration information; you'll notice the COM component class name and GUID, as well as version information and a general description.
- The <implements> section declares the public methods that the COM plug-in has to support.
- The <script> section contains the actual implementation:
  - The first part of the script section declares the global variables that will be used.
  - The second part of the script contains the required methods:
    - The OpenInput() method opens the FTP RSCA data for a specific FTP site:
      - The site will be specified in your Log Parser query, and the COM plug-in supports using either the site name or the site's numeric ID:
        - "SELECT * FROM ftp.example.com"
        - "SELECT * FROM 1"
      - All of the RSCA data for the site is stored in Session classes that are cached in a global dictionary for quick access.
    - The CloseInput() method doesn't do much in this script, but your COM plug-ins may require more cleanup depending on your data source.
    - The GetFieldCount() method simply returns the number of data fields in each record of your data.
    - The GetFieldName() method returns the name of a field that is passed to the method as a number.
    - The GetFieldType() method returns the data type of a field that is passed to the method as a number; Log Parser supports the following five data types for COM plug-ins: TYPE_INTEGER, TYPE_REAL, TYPE_STRING, TYPE_TIMESTAMP, and TYPE_NULL.
    - The GetValue() method returns the data value of a field that is passed to the method as a number.
    - The ReadRecord() method moves to the next record in your data set; this method returns True if there is data to read, or False when the end of data is reached.
  - The third part of the script contains some helper features:
    - The GetUtcDate() method returns the current date and time in Coordinated Universal Time (UTC) as a string such as 2012-05-25T11:42:11.000Z.
    - The FindElement() method returns a specified element's position within an IIS collection, or -1 if the element cannot be found. This method is used to locate the specified FTP site within the IIS configuration.
    - The Session class is a generic construct to hold the information for a single FTP RSCA data record.
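To make the calling pattern in that list concrete, here's a rough Python model of the same contract. It's a sketch under assumptions, not a port of the scriptlet: the FtpRscaModel class, the run_query() driver, and the in-memory SITES data are hypothetical stand-ins (the real plug-in reads live RSCA data from IIS). The model is trimmed to four fields, uses a few values borrowed from the sample output later in this post, and the call order in run_query() is an assumption about how Log Parser drives an input plug-in.

```python
# Hypothetical Python model of the Log Parser COM input-format contract.
# Field type codes mirror the constants declared in GetFieldType().
TYPE_INTEGER, TYPE_REAL, TYPE_STRING, TYPE_TIMESTAMP, TYPE_NULL = 1, 2, 3, 4, 5

# Trimmed-down field list (the real scriptlet exposes 11 fields).
FIELDS = [
    ("clientIp", TYPE_STRING),
    ("userName", TYPE_STRING),
    ("currentCommand", TYPE_STRING),
    ("bytesSent", TYPE_INTEGER),
]

# Hypothetical stand-in for IIS: (numeric site ID, site name) -> sessions.
SITES = {
    (1, "ftp.example.com"): [
        {"clientIp": "10.121.75.26", "userName": "robert",
         "currentCommand": "PASS", "bytesSent": 6049},
        {"clientIp": "10.121.75.26", "userName": "alice",
         "currentCommand": "RETR", "bytesSent": 5780},
    ],
}


class FtpRscaModel:
    def __init__(self, sites):
        self._sites = sites
        self._records = []
        self._index = -1

    def open_input(self, source):
        # Like OpenInput(): accept either the site name or its numeric ID.
        for (site_id, name), sessions in self._sites.items():
            if source == name or source == str(site_id):
                self._records = list(sessions)
                break
        else:
            self._records = []  # unknown site: no records
        self._index = -1

    def close_input(self, abort=False):
        # Like CloseInput(): reset the cursor and drop the cached records.
        self._index = -1
        self._records = []

    def get_field_count(self):
        return len(FIELDS)

    def get_field_name(self, i):
        return FIELDS[i][0]

    def get_field_type(self, i):
        return FIELDS[i][1]

    def read_record(self):
        # Like ReadRecord(): advance the cursor; False at end of data.
        if self._index < len(self._records) - 1:
            self._index += 1
            return True
        return False

    def get_value(self, i):
        # Like GetValue(): look up field i in the current record.
        return self._records[self._index][self.get_field_name(i)]


def run_query(plugin, source):
    """Assumed call sequence for a 'SELECT * FROM <source>' query."""
    plugin.open_input(source)
    try:
        names = [plugin.get_field_name(i) for i in range(plugin.get_field_count())]
        rows = []
        while plugin.read_record():
            rows.append({n: plugin.get_value(i) for i, n in enumerate(names)})
        return rows
    finally:
        plugin.close_input(False)


if __name__ == "__main__":
    for row in run_query(FtpRscaModel(SITES), "ftp.example.com"):
        print(row)
```

Passing either "ftp.example.com" or "1" to run_query() returns the same two rows, which mirrors the two FROM clauses the scriptlet accepts.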

This wraps up the description of how the scriptlet works as a COM plug-in; in the next section we'll look at how to actually use it.
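One detail in the helpers is worth a closer look. GetUtcDate() adds the Windows ActiveTimeBias registry value (in minutes) to the local time, following the Windows rule that UTC = local time + bias. The bias is positive for zones west of UTC and negative for zones east of it, which makes the arithmetic easy to get backwards. Here's a small Python sketch of the same conversion. The to_utc_iso() name is hypothetical, and the bias is passed in as a parameter rather than read from the registry.

```python
from datetime import datetime, timedelta


def to_utc_iso(local_now: datetime, active_time_bias_minutes: int) -> str:
    """Mirror GetUtcDate(): UTC = local time + ActiveTimeBias (in minutes)."""
    utc = local_now + timedelta(minutes=active_time_bias_minutes)
    # Same string shape as the scriptlet: whole seconds plus a literal ".000Z".
    return utc.strftime("%Y-%m-%dT%H:%M:%S") + ".000Z"


if __name__ == "__main__":
    # Hypothetical input: 04:42:11 local time with a bias of 420 minutes (UTC-7).
    print(to_utc_iso(datetime(2012, 5, 25, 4, 42, 11), 420))
```

With a bias of 420 minutes (for example, Pacific Daylight Time), 4:42:11 AM local time becomes 2012-05-25T11:42:11.000Z, which has the same shape as the currentDateTime values in the sample output. In new Python code you would normally call datetime.now(timezone.utc) instead of applying a bias by hand.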

Using the COM plug-in with Log Parser

Earlier I showed you how you can use the COM plug-in with Log Parser by using syntax like the following:

logparser "SELECT * FROM ftp.example.com" -i:COM -iProgID:MSUtil.LogQuery.FtpRscaScriptlet

This will return output that resembles the following:

| currentDateTime | clientIp | sessionId | sessionStartTime | userName | currentCommand | previousCommand | commandStartTime | bytesSent | bytesReceived | lastErrorStatus |
|---|---|---|---|---|---|---|---|---|---|---|
| 2012-05-25T11:42:11.000Z | 10.121.75.26 | 3950d1e5-3e94-4734-a89a-9768c52aa924 | 2012-05-25T10:08:09.861Z | robert | PASS | USER | 2012-05-25T11:42:06.080Z | 6049 | 1193 | 0 |
| 2012-05-25T11:42:11.000Z | 10.121.75.26 | d1591fa8-3b09-4afd-b2c0-950421ba79fe | 2012-05-25T10:08:18.184Z | robert | RETR | NLST | 2012-05-25T11:42:07.172Z | 5887 | 1169 | 0 |
| 2012-05-25T11:42:11.000Z | 10.121.75.26 | 0f92b5ed-920a-441d-a15d-39056a36f2a4 | 2012-05-25T10:08:22.327Z | robert | NOOP | NLST | 2012-05-25T11:41:40.917Z | 5857 | 1163 | 0 |
| 2012-05-25T11:42:11.000Z | 10.121.75.26 | 16925f0d-1fc5-4cb7-be19-ab33face2da9 | 2012-05-25T10:08:48.756Z | | NLST | SYST | 2012-05-25T11:41:44.770Z | 6026 | 1192 | 0 |
| 2012-05-25T11:42:11.000Z | 10.121.75.26 | aeb68389-869b-4afc-8c81-47b578e74824 | 2012-05-25T10:08:54.214Z | | USER | HOST | 2012-05-25T11:41:42.087Z | 5864 | 1168 | 0 |
| 2012-05-25T11:42:11.000Z | 10.121.75.26 | 4ed55569-ee25-47d1-8388-12cdb90a1c07 | 2012-05-25T10:12:31.555Z | alice | RETR | NLST | 2012-05-25T11:42:01.789Z | 5780 | 1138 | 0 |
| 2012-05-25T11:42:11.000Z | 10.121.75.26 | d6b16bb4-cb65-492d-a9fa-fbd6b72de0f3 | 2012-05-25T10:12:54.591Z | bob | NOOP | NLST | 2012-05-25T11:41:46.563Z | 5748 | 1130 | 0 |

Statistics:
-----------
Elements processed: 7
Elements output: 7
Execution time: 0.12 seconds

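That information is something of a jumbled mess, but we can clean it up a bit by choosing only the fields that we're interested in, for example:

logparser "SELECT userName,currentCommand,commandStartTime FROM ftp.example.com" -i:COM -iProgID:MSUtil.LogQuery.FtpRscaScriptlet

This returns output like the following: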

| userName | currentCommand | commandStartTime |
|---|---|---|
| robert | PASS | 2012-05-25T11:42:06.080Z |
| robert | RETR | 2012-05-25T11:42:07.172Z |
| robert | NOOP | 2012-05-25T11:41:40.917Z |
| | NLST | 2012-05-25T11:41:44.770Z |
| | USER | 2012-05-25T11:41:42.087Z |
| alice | RETR | 2012-05-25T11:42:01.789Z |
| bob | NOOP | 2012-05-25T11:41:46.563Z |

Statistics:
-----------
Elements processed: 7
Elements output: 7
Execution time: 0.12 seconds

Now let's look at some interesting data. One of the main focuses of this blog series is charting with Log Parser, so let's do something useful with the data. To start with, here's how to create a pie chart that counts the number of sessions by user name:

logparser "SELECT

This will generate a chart like the following:

Here's a variation on that script that illustrates how to create a pie chart that counts the number of authenticated sessions versus anonymous sessions:

logparser "SELECT

This will generate a chart like the following:

We can also do line, bar, and column charts with the data:

logparser "SELECT

The above code sample will generate a chart like the following:

There's a lot more that we could do with this, but eventually I have to get some sleep, so I think that's enough fun for the day.
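As a rough cross-check on what the two pie charts should show, the grouping behind them can be sketched in Python over the userName column of the seven sample sessions listed earlier. This models the aggregation (roughly a GROUP BY on userName with a COUNT(*)), not the Log Parser queries themselves. The function names are hypothetical, and treating an empty userName or the literal name anonymous as an anonymous session is an assumption.

```python
from collections import Counter

# userName values from the seven sample sessions; two of them are empty.
SAMPLE_USERS = ["robert", "robert", "robert", "", "", "alice", "bob"]


def sessions_by_user(users):
    """Count sessions per user, similar to GROUP BY userName with COUNT(*)."""
    return Counter(user if user else "(none)" for user in users)


def is_anonymous(user):
    # Assumption: no user name, or the literal FTP "anonymous" account name.
    return user == "" or user.lower() == "anonymous"


def authenticated_vs_anonymous(users):
    """Split sessions into the two buckets used by the second pie chart."""
    return Counter(
        "Anonymous" if is_anonymous(user) else "Authenticated" for user in users
    )


if __name__ == "__main__":
    print(sessions_by_user(SAMPLE_USERS).most_common())
    print(authenticated_vs_anonymous(SAMPLE_USERS))
```

For the sample data, this gives robert 3, (none) 2, alice 1, and bob 1, and 5 authenticated sessions versus 2 anonymous ones under the stated assumption.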

Summary

In this blog post, I've shown you how to add your own custom input format to Log Parser by creating a scriptlet as a COM plug-in. I hope that you take this information and create some great Log Parser plug-ins of your own.

;-]

Advanced Log Parser Charts Part 3 - Missing Office Web Components for Charting

25 May 2012 • by Bob • IIS, LogParser

In Part 3 of this series, I'll explain what to do when you're missing the Office Web Components that are required for creating the charts that I have been demonstrating in this series.

Here's a brief explanation of the symptoms: you try a simple query that will create a chart like the following example:

logparser.exe "SELECT Date,

And you get the following error message:

Error creating output format "CHART": This output format requires a licensed Microsoft Office Chart Web Component to be installed on the local machine

More often than not, this simply means that you have Office 2007 or Office 2010, which do not contain the Office Web Components that are used by Log Parser to create charts. Fortunately, you can download the missing components from the following URL on Microsoft's website:

Office 2003 Add-in: Office Web Components

http://www.microsoft.com/en-us/download/details.aspx?id=22276

When you run the installation, you will see the following license agreement:

When you check the box to accept the license agreement and click Install, you will eventually receive the following dialog box to let you know that the Office 2003 Web Components have been installed:

Once you have the Office 2003 Web Components installed, you can run the same query successfully:

logparser.exe "SELECT Date,

Statistics:

-----------

Note: The above query generates the following somewhat uninteresting chart:

That being said, the point of this blog was to let you know how to get charting back, not how to make pretty charts. I'll save pretty charts for a future blog. ;-]

Note: This blog was originally posted at http://blogs.msdn.com/robert_mcmurray/

Advanced Log Parser Charts Part 2 - Using Gradient Colors for Area Charts

29 January 2012 • by Bob • IIS, LogParser, Scripting